Poppy genome reveals evolution of opiates

The Department of Automation Science and Technology

The opium poppy has been a source of painkillers since Neolithic times. Attendant risks of addiction threaten many today. Guo et al. now deliver a draft of the opium poppy genome, which encompasses 2.72 gigabases assembled into 11 chromosomes and predicts more than 50,000 protein-coding genes. A particularly complex gene cluster contains many critical enzymes in the metabolic pathway that generates the alkaloid drugs noscapine and morphinan.

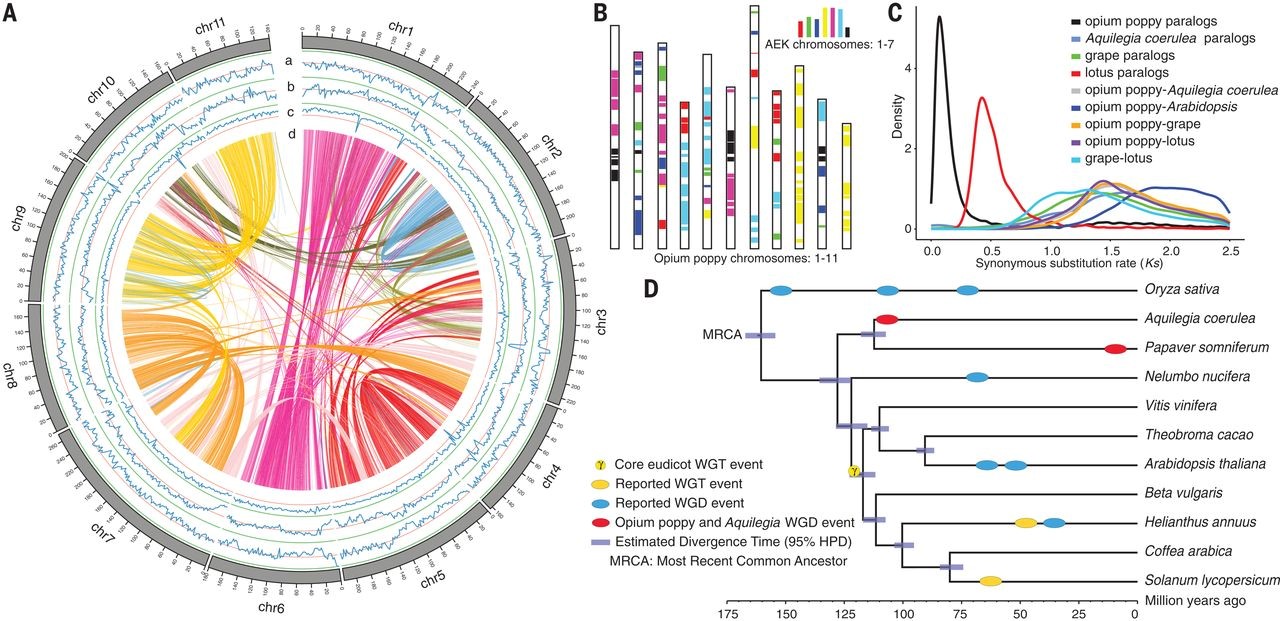

Opium poppy genome features and whole-genome duplication

(A) Characteristics of the 11 chromosomes of P. somniferum. Tracks a to c represent the distribution of gene density, repeat density, and GC density, respectively, with densities calculated in 2-Mb windows. Track d shows syntenic blocks. Band width is proportional to syntenic block size. (B) Comparison with ancestral eudicot karyotype (AEK) chromosomes reveals synteny. The syntenic AEK blocks are painted onto P. somniferum chromosomes. (C) Synonymous substitution rate (Ks) distributions of syntenic blocks for P. somniferum paralogs and orthologs with other eudicots are represented with colored lines as indicated. (D) Inferred phylogenetic tree with 48 single-copy orthologs of 11 species identified by OrthoFinder.

Unfalsified Visual Servoing for Simultaneous Object Recognition and Pose Tracking

The Department of Automation Science and Technology

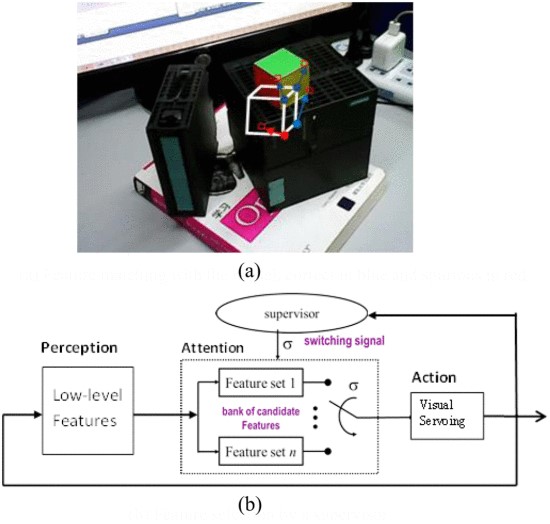

In a complex environment, simultaneous object recognition and tracking has been one of the challenging topics in computer vision and robotics. Current approaches are usually fragile due to spurious feature matching and local convergence for pose determination. Once a failure happens, these approaches lack a mechanism to recover automatically. In this paper, data-driven unfalsified control is proposed for solving this problem in visual servoing. It recognizes a target through matching image features with a 3-D model and then tracks them through dynamic visual servoing. The features can be falsified or unfalsified by a supervisory mechanism according to their tracking performance. Supervisory visual servoing is repeated until a consensus between the model and the selected features is reached, so that model recognition and object tracking are accomplished. Experiments show the effectiveness and robustness of the proposed algorithm to deal with matching and tracking failures caused by various disturbances, such as fast motion, occlusions, and illumination variation.

Unfalsified visual servoing of a 3-D model. (a) Feature matching with the model, correct in blue and spurious in red. (b) Feature selection by a supervisor.

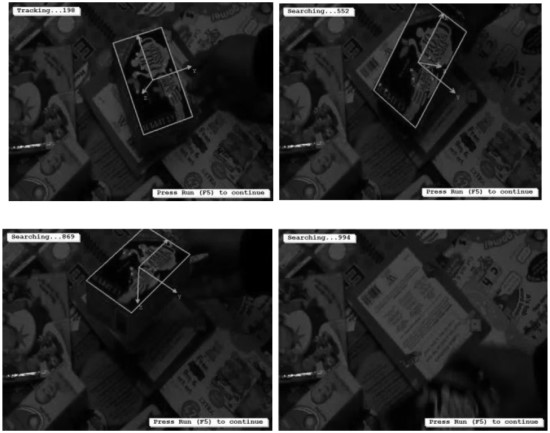

Tracking the tea box. The green coordinate system is the detected pose and the green rectangle is the top face of the tea box. The unfalsified interest points used in the visual servoing are shown as red crosses.

Due to various uncertainties existing in a real environment, visual tracking is inherently fragile and requires a fault-tolerant design in order to recover from any failure automatically. An unfalsified adaptive controller for visual tracking of a 3-D object has been proposed to deal with unreliable features extracted from an image. The proposed controller includes locally stable tracking control supervised by a switching mechanism for feature selection and global relocalization for recovery from tracking failure. The extracted features in the image can be falsified or unfalsified for tracking control by evaluation of their tracking history. Since the unfalsified control is completely data-driven, it can switch features in or out of the control loop dynamically for reliable feature selection or fault rectification. From a cognitive point of view, the proposed algorithm provides a low-level servo controller with some intelligent aspects, such as visual attention and context-awareness. The proposed algorithm was implemented for visual tracking of modelled objects under adverse conditions, such as fast motion, cluttered background, occlusions, illumination variation, and movement out of the field of view. The recognition and tracking processes coordinated by the supervisor were demonstrated, and satisfactory tracking performance was achieved.
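The supervisory idea described above, where features are switched in or out of the control loop according to their tracking history, can be illustrated with a minimal sketch. The `Feature` class, the mean-error criterion, the pixel threshold, the window length, and the minimum feature count are all hypothetical choices made for illustration; they are not taken from the paper.

```python
from dataclasses import dataclass, field


@dataclass
class Feature:
    """A tracked image feature with its recent tracking errors (hypothetical structure)."""
    name: str
    errors: list = field(default_factory=list)  # tracking errors in pixels, oldest first


def supervise(features, threshold=3.0, window=5):
    """Split features into (unfalsified, falsified) names by mean recent error.

    Assumption: a feature is falsified when its mean error over the last
    `window` samples exceeds `threshold`. A feature with no history is
    treated as falsified until it has been observed.
    """
    unfalsified, falsified = [], []
    for f in features:
        recent = f.errors[-window:]
        mean_err = sum(recent) / len(recent) if recent else float("inf")
        (unfalsified if mean_err <= threshold else falsified).append(f.name)
    return unfalsified, falsified


def needs_relocalization(unfalsified, min_features=4):
    """Assumed rule: fall back to global relocalization when too few features survive."""
    return len(unfalsified) < min_features


features = [
    Feature("corner_a", [1.0, 1.2, 0.8]),
    Feature("corner_b", [2.5, 2.0, 2.9]),
    Feature("edge_c", [1.0, 6.0, 9.0]),  # error keeps growing, e.g. a spurious match
]
kept, dropped = supervise(features)
```

Because the decision uses only recorded tracking errors, the sketch stays data-driven in the same spirit as the text: no model of why a feature failed is needed to switch it out.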

Group dynamics in discussing incidental topics over online social networks

The Department of Automation Science and Technology

The groups discussing incidental popular topics over online social networks have recently become a research focus. By analyzing some fundamental relationships in these groups, we find that the traditional models are not suitable for describing the group dynamics of incidental topics. In this article we analyze the dynamics of online groups discussing incidental popular topics and present a new model for predicting the dynamic sizes of incidental topic groups. We discover that the dynamic sizes of incidental topic groups follow a heavy-tailed distribution. Based on this finding, we develop an adaptive parametric method for predicting the dynamics of this type of group. The model presented in the article is validated using actual data from LiveJournal and Sohu blogs. The empirical results show that the model can effectively predict the dynamic characteristics of incidental topic groups over both short and long timescales, and outperforms the SIR model. We conclude by offering two strategies for promoting the group popularity of incidental topics.

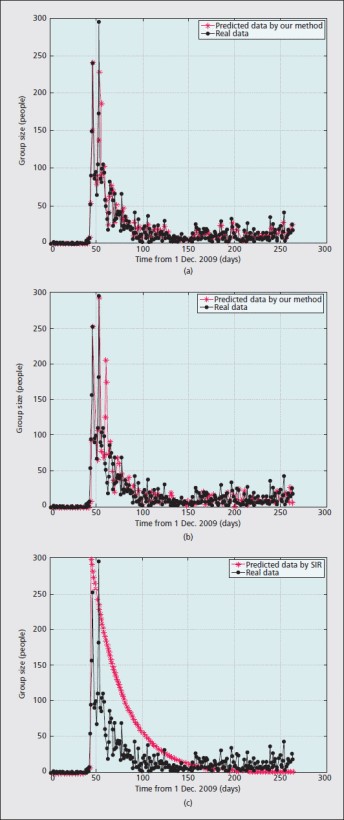

The predicted group sizes for incidental topics from LiveJournal: a) one-day advance prediction by our method; b) seven-day advance prediction by our method; c) prediction results by the SIR model.

We analyze the group dynamics of discussing incidental topics and present a method for predicting dynamic group sizes. We found that the widely used epidemic model and other traditional models are not suitable for describing the group dynamics of incidental topic discussion: our analysis of actual data shows that the assumptions made in the other traditional models do not hold, and meaningful parameter estimation for the epidemic model is quite difficult for incidental topics. We also found that the dynamic group sizes of incidental topics follow a heavy-tailed distribution. Based on this finding, we developed an adaptive parametric model for predicting the group dynamics in discussing incidental topics. This model can be applied to compute the d-step-ahead prediction by fitting the group size curve in logarithmic form at each prediction step.
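A minimal sketch of d-step-ahead prediction by refitting a curve in logarithmic form at each step is given below. It assumes, purely for illustration, that the daily group size follows a power law in time, so that ln(size) is linear in ln(day); the function names, the sliding window, and the power-law form are assumptions and not the paper's actual parametric model.

```python
import math


def fit_log_log(days, sizes):
    """Least-squares fit of ln(size) = a + b * ln(day); returns (a, b).

    Requires strictly positive days and sizes, and at least two distinct days.
    """
    xs = [math.log(t) for t in days]
    ys = [math.log(s) for s in sizes]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


def predict_ahead(days, sizes, d, window=5):
    """Refit on the most recent `window` observations and extrapolate d days ahead."""
    a, b = fit_log_log(days[-window:], sizes[-window:])
    return math.exp(a + b * math.log(days[-1] + d))


# Synthetic data (not from LiveJournal or Sohu): size decays as 1000 * day^-1.5.
days = list(range(1, 11))
sizes = [1000.0 * t ** -1.5 for t in days]
one_day_ahead = predict_ahead(days, sizes, d=1)
seven_days_ahead = predict_ahead(days, sizes, d=7)
```

Refitting on a sliding window at every step is what makes such a scheme adaptive: the fitted exponent can drift as the discussion cools down.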

The prediction model developed is validated by actual data from LiveJournal and Sohu blogs. The empirical results show that this model can effectively predict the active period and number of total users participating in the discussion over both short and long prediction periods, and outperforms the SIR model.

Two strategies are proposed for promoting group popularity in discussing incidental topics. First, publicizing more related news can attract more users to topic discussions within the same day. In addition, the postings should be recommended not only to one-hop neighbors but also to two-hop neighbors.

Enhanced Hidden Moving Target Defense in Smart Grids

The Department of Automation Science and Technology

Recent research has proposed a moving target defense (MTD) approach that actively changes transmission line susceptance to preclude stealthy false data injection (FDI) attacks against the state estimation of a smart grid. However, existing studies were often conducted under a weak adversarial setting, in that they ignore the possibility that alert attackers can also try to detect the activation of MTD before they launch the FDI attacks. We call this new threat parameter confirming-first (PCF) FDI. To improve the stealthiness of MTD, we propose a hidden MTD approach that cannot be detected by the attackers and prove its equivalence to an MTD that maintains the power flows of the whole grid. Moreover, we analyze the completeness of MTD and show that any hidden MTD is incomplete in that FDI attacks may bypass the hidden MTD opportunistically. This result suggests that stealthiness and completeness are two conflicting goals in MTD design. Finally, we propose an approach to enhancing the hidden MTD against a class of highly structured FDI attacks. We also discuss the MTD’s operational costs under the dc and ac models. We conduct simulations to show the effectiveness of the hidden MTD against PCF-FDI attacks under realistic settings.

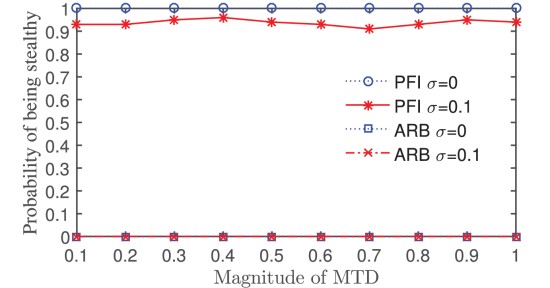

PFI-MTD vs. arbitrary MTD (ARB) and impact of noises.

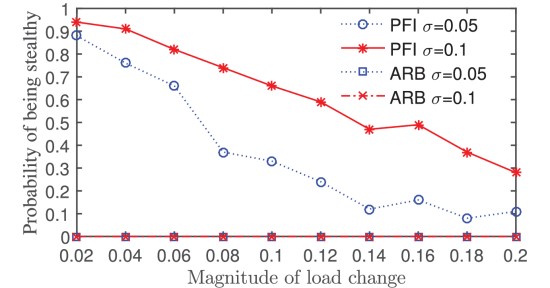

Impact of variable loads and noises on PFI-MTD.

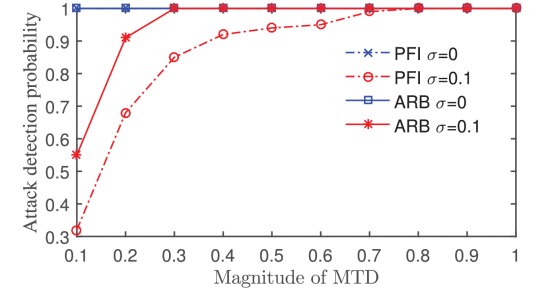

Attack detection probability of PFI-MTD and arbitrary MTD.

We study PCF-FDI, in which the attacker tries to detect the activation of MTD before launching an FDI attack. To defend against PCF-FDI, we propose a hidden MTD approach that maintains the power flows and develop an algorithm to construct the hidden MTD. We analyze the completeness of MTD and show that stealthiness and completeness are two conflicting goals in MTD design. Moreover, we propose an approach to enhancing incomplete MTD (including hidden MTD) against a class of highly structured FDI attacks. Finally, we analyze MTD’s operational cost and present numerical results. Simulations are conducted to compare the stealthiness and completeness of our hidden MTD approach with the existing arbitrary MTD approach.
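The basic mechanism behind MTD and behind the attacker's parameter-confirming check can be sketched on a toy dc model. The example assumes a hypothetical 3-bus loop with bus 1 as the reference, line-flow measurements only, no noise, and made-up susceptance values. It shows (1) that an attack crafted from stale susceptances leaves a residual once a susceptance is changed, and (2) that an arbitrary MTD is itself visible to an attacker who tests measurements against the old model. It does not construct a hidden MTD.

```python
import math


def measurement_matrix(b12, b13, b23):
    """Line-flow measurement matrix of a 3-bus dc model (bus 1 is the reference).

    Rows: flows on lines 1-2, 1-3, 2-3. Columns: phase angles theta2, theta3.
    """
    return [[-b12, 0.0], [0.0, -b13], [b23, -b23]]


def apply(H, x):
    """Noise-free measurements z = H x for a two-state system."""
    return [row[0] * x[0] + row[1] * x[1] for row in H]


def residual_norm(H, z):
    """Distance from z to the column space of a full-rank 3x2 matrix H.

    This equals the unweighted least-squares residual norm; it is computed
    from the normal vector (cross product of the two columns).
    """
    c1 = [row[0] for row in H]
    c2 = [row[1] for row in H]
    n = [c1[1] * c2[2] - c1[2] * c2[1],
         c1[2] * c2[0] - c1[0] * c2[2],
         c1[0] * c2[1] - c1[1] * c2[0]]
    return abs(sum(a * b for a, b in zip(n, z))) / math.sqrt(sum(a * a for a in n))


H_old = measurement_matrix(10.0, 10.0, 10.0)  # susceptances known to the attacker
H_new = measurement_matrix(12.0, 10.0, 10.0)  # arbitrary MTD: line 1-2 perturbed

# Stealthy FDI attack built from the stale model: a = H_old * c.
attack = apply(H_old, [0.1, 0.0])
undetected_before = residual_norm(H_old, attack)  # attack lies in col(H_old)
detected_after = residual_norm(H_new, attack)     # attack leaves col(H_new)

# Parameter-confirming check: measurements produced under H_new are
# inconsistent with the attacker's old model, revealing the MTD.
z_after_mtd = apply(H_new, [-0.05, -0.1])
attacker_sees_mtd = residual_norm(H_old, z_after_mtd)
```

In this toy setting, a hidden MTD would have to keep the post-MTD measurements inside the column space of `H_old`, which is the intuition behind maintaining the power flows.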

A high-order representation and classification method for transcription factor binding sites recognition in Escherichia coli

The Department of Automation Science and Technology

Identifying transcription factor binding sites (TFBSs) plays an important role in understanding gene regulatory processes. The underlying mechanism of the specific binding of transcription factors (TFs) is still poorly understood. Previous machine learning-based approaches to identifying TFBSs commonly map a known TFBS to a one-dimensional vector using its physicochemical properties. However, when the dimension-sample rate (i.e., number of dimensions/number of samples) is large, concatenating different physicochemical properties into a one-dimensional vector not only is likely to lose some structural information, but also poses significant challenges to recognition methods. Materials and method: In this paper, we introduce a purely geometric representation method, the tensor (also called a multidimensional array), to represent TFs using their physicochemical properties. Accompanying the multidimensional array representation, we also develop a tensor-based recognition method, the tensor partial least squares classifier (abbreviated as TPLSC). Intuitively, multidimensional arrays enable borrowing more information than one-dimensional arrays. The performance of each method is evaluated by average F-measure on 51 Escherichia coli TFs from the RegulonDB database. Results: In our first experiment, the results show that multiple nucleotide properties can obtain more power than dinucleotide properties. In the second experiment, the results demonstrate that our method gains increased prediction power, an improvement of roughly 33% over the best result from existing methods. Conclusion: The representation method for TFs is an important step in TFBS recognition. We illustrate the benefits of this representation on real data via a series of experiments. This method can provide further insights into the mechanism of TF binding and be of great use for metabolic engineering applications.

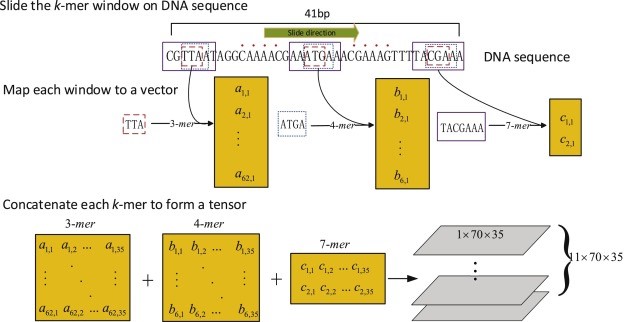

Schematic diagram of the process from a raw DNA sequence to a tensor. This is an example for one of the binding sites of the AgaR TF. The total numbers of properties for 3-mers, 4-mers, and 7-mers are 62, 6, and 2, respectively. We concatenated the k-mers with the same length (35) to form a tensor (1 × 70 × 35). The total number of binding sites for the AgaR TF is 11; therefore, we can obtain a tensor (11 × 70 × 35) for the AgaR TF.

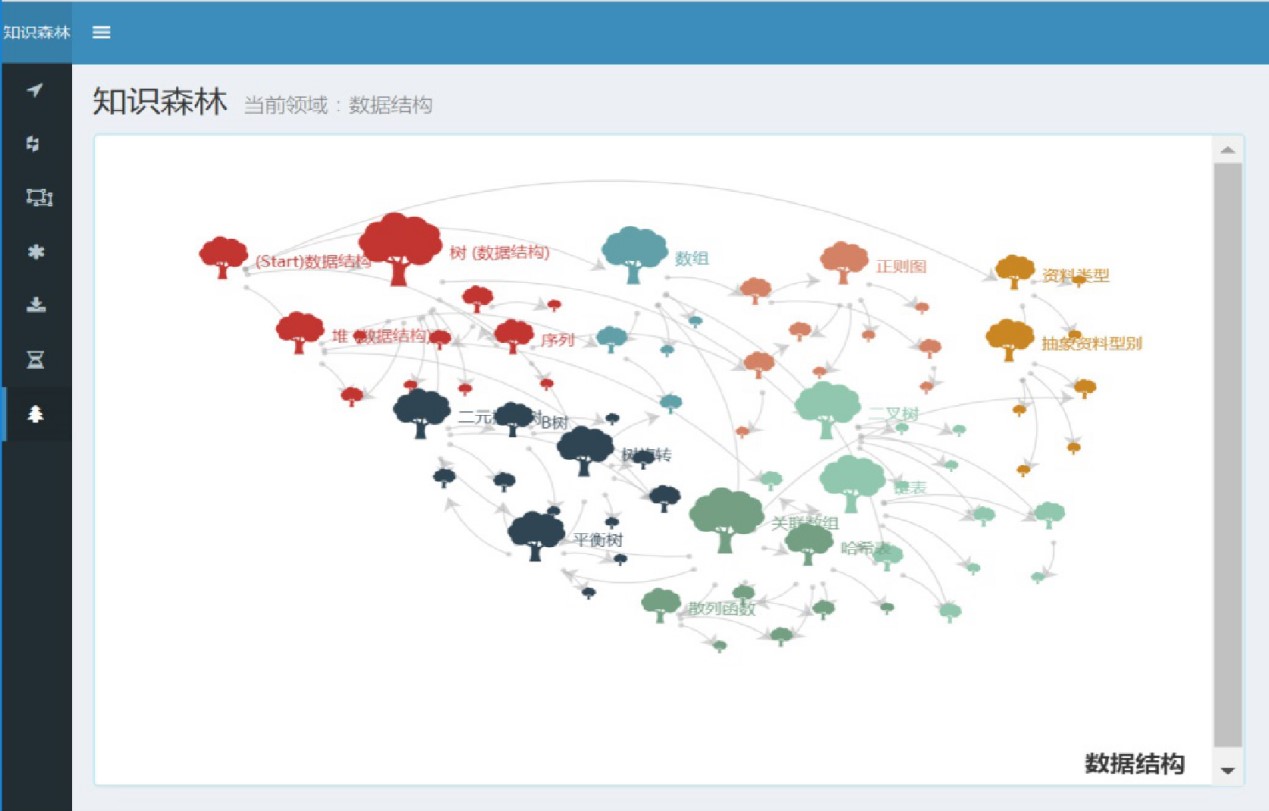

Mining Suspicious Tax Evasion Groups in Big Data

The Department of Automation Science and Technology

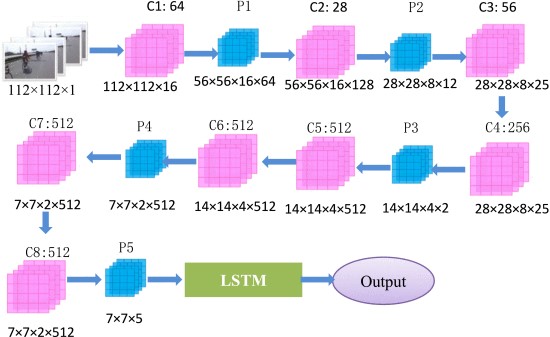

There is evidence that an increasing number of enterprises plot together to evade tax in an unperceived way. At the same time, taxation-related data is a classic kind of big data. These issues challenge the effectiveness of traditional data mining-based tax evasion detection methods. To address this problem, we first investigate the classic tax evasion cases, and employ a graph-based method to characterize their property that describes two suspicious relationship trails with the same antecedent node behind an Interest-Affiliated Transaction (IAT). Next, we propose a Colored Network-Based Model (CNBM) for characterizing economic behaviors, social relationships, and the IATs between taxpayers, and generating a Taxpayer Interest Interacted Network (TPIIN). To accomplish the tax evasion detection task by discovering suspicious groups in a TPIIN, methods for building a patterns tree and matching component patterns are introduced and the completeness of the methods based on graph theory is presented. Then, we describe an experiment based on real data and a simulated network. The experimental results show that our proposed method greatly improves the efficiency of tax evasion detection and provides a clear explanation of the tax evasion behaviors of taxpayer groups.

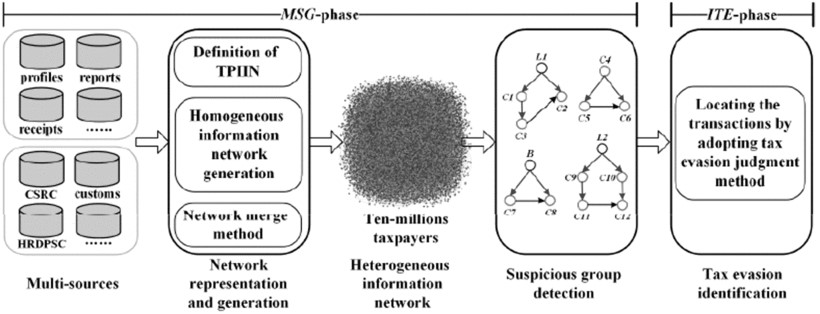

Flow of tax evasion identification.

Schematic procedure of multi-network fusion.

The proposed method adopts a heterogeneous information network to describe economic behaviors among taxpayers and casts new light on the tax evasion detection issue. It not only utilizes multiple homogeneous relationships in big data to form the heterogeneous information network, but also makes maximal use of trail-based pattern recognition to select the suspicious groups. Through fusion and reduction of multiple social relationships, we simplify the heterogeneous information network into a colored model with two node colors and two edge colors. Moreover, after investigating three cases, we conclude that identifying two suspicious relationship paths behind an IAT is a core problem of detecting suspicious groups. The advantage of the proposed method is that it does not lead to a combinatorial explosion of subgraphs. This improves the efficiency of detecting IAT-based tax evasion. In contrast with other transaction-based data mining techniques for tax evasion detection, the experimental results show that detecting the suspicious relationships behind a trading relationship first scales down the number of suspects (companies) and then the number of suspicious transactions. Furthermore, the mined results are related to many kinds of behavior patterns in illegal tax arrangements, which are intuitive for tax investigators and provide a good explanation of how tax evasion works. Our proposed method has been incorporated into a tax source monitoring and management system employed in several provinces of mainland China.
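The core pattern described above, two relationship trails from the same antecedent node behind a trading relationship, can be sketched as a small graph search. The edge lists, node names, and the choice to count a trading party as its own antecedent are hypothetical; the sketch is a naive reachability check and does not implement the patterns tree or component pattern matching of the proposed method.

```python
from collections import deque


def suspicious_trades(influence, trades):
    """Return {trade: common antecedents} for trades whose two parties share
    an antecedent node through directed influence edges.

    influence: list of (source, target) edges, e.g. investment or control.
    trades:    list of (seller, buyer) trading relationships.
    Assumption: a party counts as its own antecedent, so a company trading
    with a company it controls is also flagged.
    """
    parents = {}
    for src, dst in influence:
        parents.setdefault(dst, set()).add(src)

    def antecedents(node):
        # Breadth-first search over reversed influence edges.
        seen = {node}
        queue = deque([node])
        while queue:
            current = queue.popleft()
            for parent in parents.get(current, ()):
                if parent not in seen:
                    seen.add(parent)
                    queue.append(parent)
        return seen

    flagged = {}
    for seller, buyer in trades:
        common = antecedents(seller) & antecedents(buyer)
        if common:
            flagged[(seller, buyer)] = common
    return flagged


# Hypothetical network: person P1 controls C1 and C2; person P2 controls C3.
influence = [("P1", "C1"), ("P1", "C2"), ("P2", "C3")]
trades = [("C1", "C2"), ("C2", "C3")]
flagged = suspicious_trades(influence, trades)
```

Only the trade between C1 and C2 is flagged, because both companies trace back to P1; the trade between C2 and C3 has no shared antecedent.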

In future work, the proposed method will be extended to deal with directed cycles in the investment graph and with methods for computing edge weights during the build-in phase of the TPIIN, in order to help identify tax evaders. In addition, the possibility of introducing more relationships into the heterogeneous information network will be investigated. Moreover, as the size of the TPIIN increases, parallel and distributed graph processing techniques will be applied to the proposed method to improve its efficiency and adaptability.

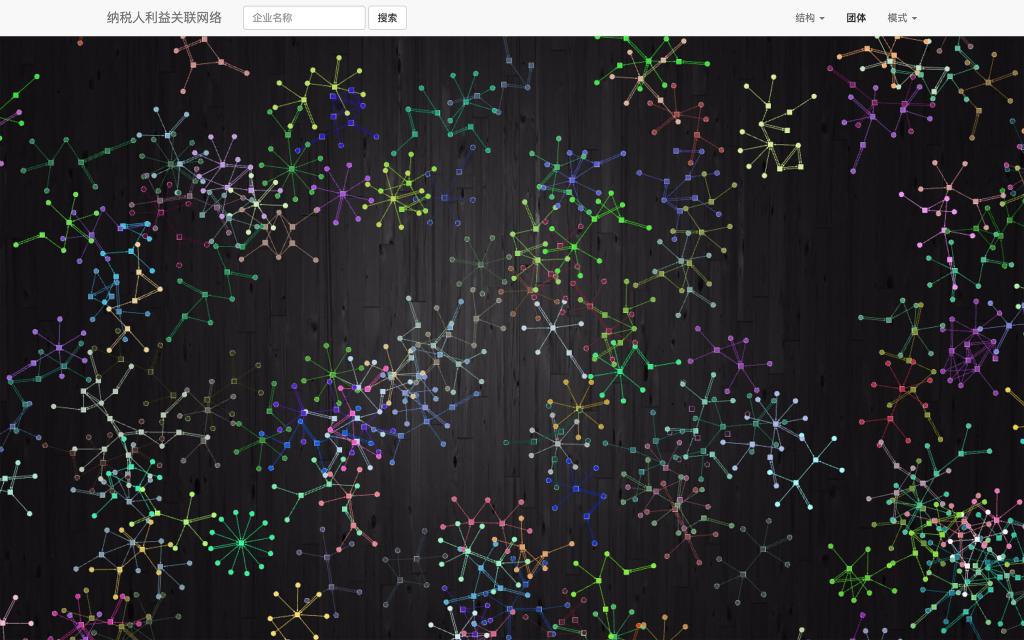

Deep Learning for Fall Detection: Three-Dimensional CNN Combined With LSTM on Video Kinematic Data

The Department of Automation Science and Technology

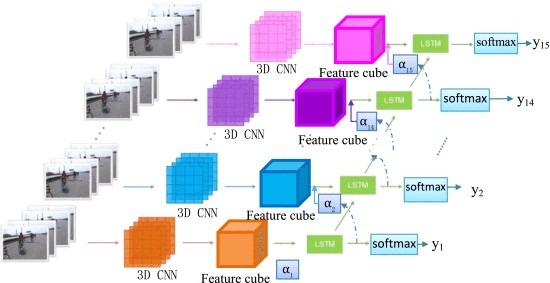

Fall detection is an important public healthcare problem. Timely detection could enable instant delivery of medical service to the injured. A popular nonintrusive solution for fall detection is based on videos obtained through an ambient camera, and the corresponding methods usually require a large dataset to train a classifier and are inclined to be influenced by the image quality. However, it is hard to collect fall data, so simulated falls are recorded instead to construct the training dataset, which is limited in quantity. To address these problems, a three-dimensional convolutional neural network (3-D CNN) based method for fall detection is developed, which uses only video kinematic data to train an automatic feature extractor and can circumvent the requirement of deep learning solutions for a large fall dataset. A 2-D CNN can only encode spatial information, whereas the employed 3-D convolution can extract motion features from a temporal sequence, which is important for fall detection. To further locate the region of interest in each frame, a long short-term memory (LSTM) based spatial visual attention scheme is incorporated. The sports dataset Sports-1M, which contains no fall examples, is employed to train the 3-D CNN, which is then combined with the LSTM to train a classifier with a fall dataset. Experiments have verified the proposed scheme on a fall detection benchmark with accuracy as high as 100%. Superior performance has also been obtained on other activity databases.
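Why a 3-D convolution can pick up motion that a per-frame 2-D convolution cannot is illustrated by the toy example below. It uses a naive pure-Python 3-D cross-correlation with a hand-written temporal-difference kernel; the kernel, the tiny 3 × 3 frames, and the `motion_energy` helper are illustrative assumptions and have nothing to do with the trained network in the paper.

```python
def conv3d(clip, kernel):
    """Naive 'valid' 3-D cross-correlation of a clip (T x H x W nested lists)
    with a kernel (kt x kh x kw nested lists)."""
    T, H, W = len(clip), len(clip[0]), len(clip[0][0])
    kt, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    out = []
    for t in range(T - kt + 1):
        plane = []
        for i in range(H - kh + 1):
            row = []
            for j in range(W - kw + 1):
                row.append(sum(kernel[a][b][c] * clip[t + a][i + b][j + c]
                               for a in range(kt)
                               for b in range(kh)
                               for c in range(kw)))
            plane.append(row)
        out.append(plane)
    return out


# A 2 x 1 x 1 kernel spanning two consecutive frames: next frame minus current.
TEMPORAL_DIFF = [[[-1]], [[1]]]


def frame(r, c, size=3):
    """A size x size frame with a single bright pixel at (r, c)."""
    return [[1 if (i, j) == (r, c) else 0 for j in range(size)] for i in range(size)]


def motion_energy(clip):
    """Sum of absolute responses of the temporal-difference kernel."""
    return sum(abs(v) for plane in conv3d(clip, TEMPORAL_DIFF)
               for row in plane for v in row)


static_clip = [frame(1, 1), frame(1, 1), frame(1, 1)]  # the pixel never moves
moving_clip = [frame(0, 0), frame(1, 1), frame(2, 2)]  # the pixel moves diagonally
```

Each frame of both clips contains exactly one bright pixel, so frame-by-frame spatial statistics such as total intensity are identical; only a kernel that spans the time axis separates the static clip (energy 0) from the moving one (energy 4).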

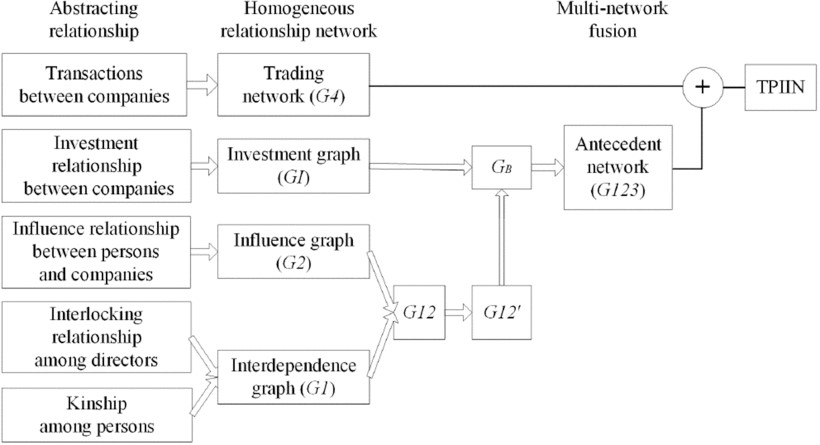

Structure of the 3D convolutional neural network.

Illustration of visual attention guided 3D CNN.

Illustration of the splitting of the input video.

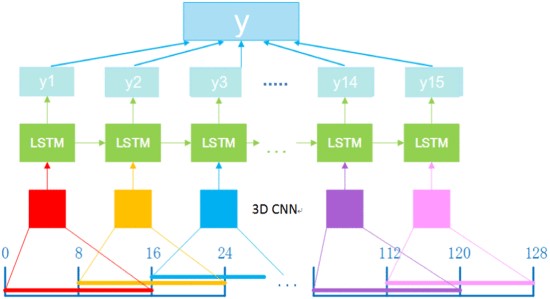

Key techniques in building knowledge forest and the applications in smart education.

The Department of Computer Science and Technology

The properties of multi-source fragmented knowledge, such as multi-modality, unordered structure and unilateral content, create obstacles when traditional knowledge graphs are applied to present such information. To address this issue, we propose a novel knowledge graph model, namely the knowledge forest, which achieves a hierarchical and faceted formalized mechanism with adjustable grain size, from fragmented knowledge to thematic faceted tree to knowledge forest. The innovations and contributions can be summarized as follows:

Proposing a unified embedding model and a mixed representation learning method, to solve the problems of feature mapping and unified representation between different modality information, such as text, image and video.

Inventing a thematic faceted spanning tree algorithm based on topological properties to address the problems of fitting fragmented knowledge into faceted tree, and actualizing the tree spanning from fragmented information.

Developing a knowledge forest construction approach based on topology fusion and modality complementation to solve the problems of mining sparse cognitive relationships between different themes, and realizing the construction from faceted trees to knowledge forest.

We have published 69 papers related to the research mentioned above in top-tier journals and conferences, such as TKDE and IJCAI, and the total citations of our papers on Google Scholar exceed 1,810.

Application:

We developed a fragmented knowledge fusion system and applied it to the ‘MOOC China’ platform. 1,084 courses have been integrated into the platform; meanwhile, a knowledge forest with approximately 70,000 faceted trees from 8 subjects and 202 courses has been built through a human-machine collaborative approach using our system, and was first published on the international LOD in 2014. Since 2016, in collaboration with the International Engineering Technology Knowledge Center of the Chinese Academy of Engineering, we have provided online training for 42 countries in the “Belt and Road Initiative”.

The results and applications of our research won the second prize of the State Science and Technology Progress Award in 2010, and the second prize of the National Teaching Achievements Award in 2009 and 2018.

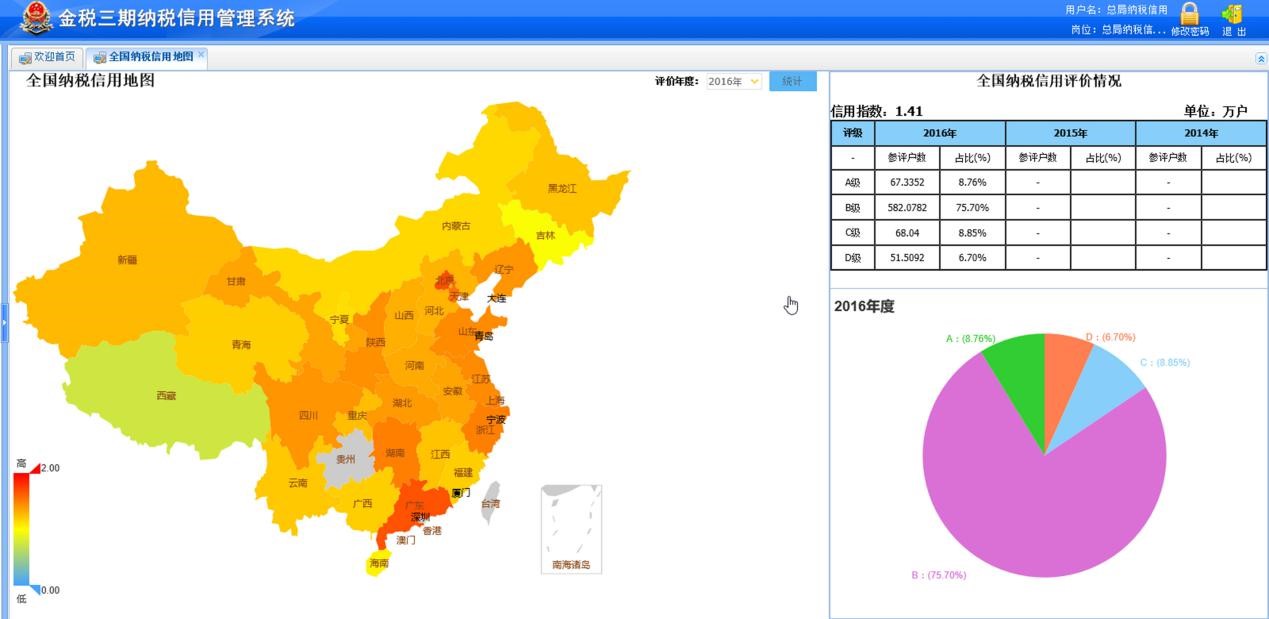

Key methods and applications of national tax big data computation and service.

The Department of Computer Science and Technology

Innovation and contribution:

Applying complex network theory to tax data analysis, we proposed the concept and model of the Taxpayer Interests Network (TPIN), which made a breakthrough in association and fusion modeling for multi-source, high-dimensional and time-varying tax data, and solved the problems of feature representation and quantitative computation of complex and diverse interest relationships among taxpayers. The innovations and contributions can be summarized as follows:

Proposing a trinity authenticity detection method based on the invoicing object, content and behavior in transaction big data, and solving the long-standing problems of false, inflated and duplicate invoices.

Inventing a three-stage invoice fraud and tax evasion discovery method comprising interest group discovery, suspicious group localization and evidence chain generation. Our method plays an important role in increasing the state's fiscal revenue and promoting tax equity: 146 illegal interest transfer modes of invoice fraud and tax evasion have been discovered.

We have published 36 papers related to our research in top-level journals and conferences, such as AI and AAAI, and some of them have received best paper and best system demonstration awards. The total citations of our papers on Google Scholar exceed 560.

Application:

We developed a series of software products for the National Tax Data Analysis Platform, which have been deployed in the State Administration of Taxation and all 70 provincial national and local tax bureaus, providing services to approximately 50 million enterprises in the country. By 2017, a total of 3,055,000 risky taxpayers had been identified and 287.3 billion yuan of tax revenue had been recovered, and the effect continues. The State Administration of Taxation describes our work as “the only implementation plan in the country” and as one that “greatly improves the automation and intelligence level of tax revenue risk identification”. In November 2016, the National Natural Science Foundation of China reported that our method “effectively improves the credibility of China's e-tax software system and the ability to detect invoice fraud and tax evasion”.

The results and applications of our research won the second prize of the 2017 State Science and Technology Progress Award, and were recognized for excellence in the 2016 China Patent Award.

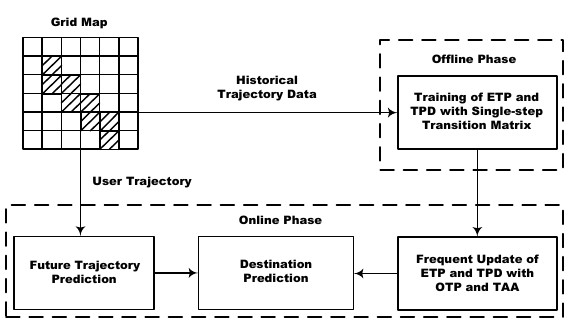

An Efficient Destination Prediction Approach Based on Future Trajectory Prediction and Transition Matrix Optimization

The Department of Computer Science and Technology

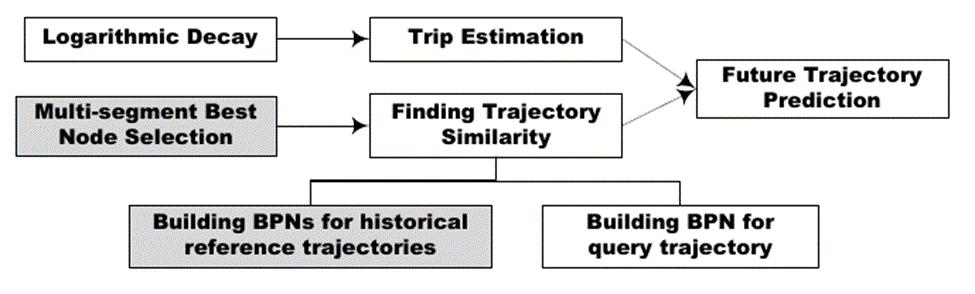

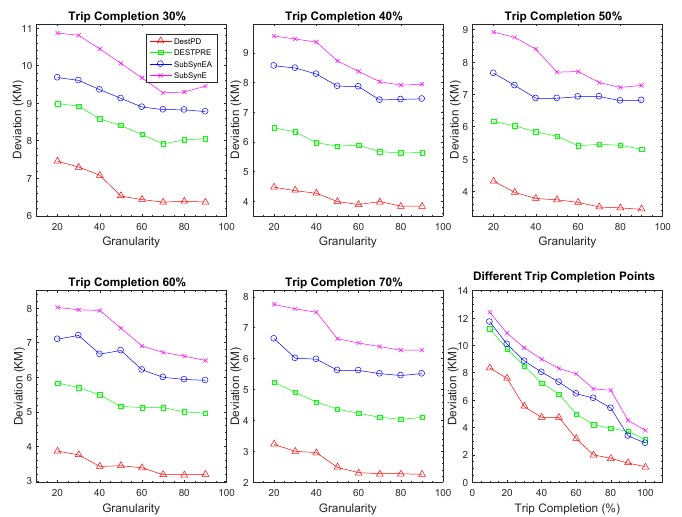

Destination prediction is an essential task in various mobile applications, and many methods have been proposed to date. However, existing methods usually suffer from heavy computational burden, data sparsity and low coverage. Therefore, a novel approach named DestPD is proposed to tackle these problems. Differing from an earlier approach that only considers the starting and current locations of a partial trip, DestPD first determines the most likely future location and then predicts the destination. It comprises two phases: offline training and online prediction. During offline training, transition probabilities between two locations are obtained via Markov transition matrix multiplication. To improve the efficiency of matrix multiplication, we propose two data constructs, Efficient Transition Probability (ETP) and Transition Probabilities with Detours (TPD), which are capable of pinpointing the minimum amount of needed computation. During online prediction, we design Obligatory Update Point (OUP) and Transition Affected Area (TAA) to accelerate the frequent update of ETP and TPD for recomputing the transition probabilities. Moreover, a new future trajectory prediction approach is devised. It captures the most recent movement based on a query trajectory and consists of two components: similarity finding through Best Path Notation (BPN) and best node selection. Our novel BPN similarity finding scheme keeps track of the nodes that induce inefficiency and then finds similarity quickly based on these nodes; it is particularly suitable for trajectories with overlapping segments. Finally, the destination is predicted by combining transition probabilities and the most probable future location through Bayesian reasoning. DestPD is proven to cut both time and space complexity by one order of magnitude. Furthermore, experimental results on real-world and synthetic datasets show that DestPD consistently surpasses the state-of-the-art methods in terms of both efficiency (roughly 100 times faster or more) and accuracy.
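The combination of Markov transition matrix multiplication and Bayesian reasoning mentioned above can be illustrated with a toy sketch. It is a deliberate simplification: the four-location network, the fixed step horizon `k`, the prior over destinations, and the scoring rule (k-step reachability times prior) are assumptions for illustration, and none of ETP, TPD, OUP, TAA, or BPN is implemented here.

```python
def matmul(a, b):
    """Plain matrix product of two nested-list matrices."""
    inner, cols = len(b), len(b[0])
    return [[sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
            for row in a]


def k_step(P, k):
    """k-step transition probabilities, i.e. the matrix power P^k."""
    n = len(P)
    result = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for _ in range(k):
        result = matmul(result, P)
    return result


def destination_posterior(P, location, prior, k):
    """Posterior over candidate destinations given a (predicted) location.

    Assumed rule: P(dest | location) is proportional to
    P(location reaches dest in k steps) * prior(dest).
    """
    reach = k_step(P, k)[location]
    scores = {dest: reach[dest] * p for dest, p in prior.items()}
    total = sum(scores.values())
    return {dest: s / total for dest, s in scores.items()} if total > 0 else scores


# Hypothetical network: 0 -> 1, then 1 -> 2 (70%) or 1 -> 3 (30%); 2 and 3 absorb.
P = [
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 0.7, 0.3],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]
prior = {2: 0.4, 3: 0.6}  # how often each destination ends historical trips
posterior = destination_posterior(P, location=0, prior=prior, k=2)
best = max(posterior, key=posterior.get)
```

Even though location 3 has the larger prior, the transition structure makes location 2 the more probable destination here, which is the kind of trade-off Bayesian reasoning resolves.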

The recent decade has witnessed the rise of ubiquitous smart devices that boast a multitude of sensors. Among them, location-related sensors play an essential role in enabling solutions concerning urban computing. Specifically, geolocation-related applications have long been a vibrant branch of extensive studies on mobile computing since its inception at the turn of this century. The ubiquity of hand-held smart gadgets has profoundly transformed our life on the go. Such devices are usually accompanied by a built-in positioning feature enabled by GPS, which sometimes works jointly with Wi-Fi and RFID to enhance precision. Regarding the investigation of human movement, researchers have reaped immense benefit by clustering popular locations and analyzing historical trajectories generated by these devices.

Destination prediction is a popular theme of mobile computing and it is broadly employed in a variety of fields, such as mobile advertising, offering cognitive assistance, fastest path computation, mobile recommender system, human mobility study, popular routes discovery, driving experience enhancement etc. In these applications, based on the starting location and the current location of a partial trip already traveled by a user, destination prediction methods seek to find out the user's destination of the whole journey.

Most earlier work employs Markov models and Bayesian inference to predict the final destination. Some methods incorporate information other than just historical trajectory data, such as geographic cover, user preference, best route and weather conditions. However, such information may be very difficult to obtain in practice. Furthermore, some methods, such as SubSynEA, tend to underutilize the available historical data. SubSynEA takes only the starting location and the current location to predict the final destination. It decomposes and then synthesizes the historical trajectories using Bayesian inference. This method may produce inaccurate predictions as it ignores the importance of the most recent movement. Moreover, some methods, such as DESTPRE, cannot deal with the data sparsity problem very well, as some locations are never covered in the historical data. DESTPRE builds a sophisticated index and then uses partial matching to predict the destination; it may therefore fail to report a destination when some paths are not present in the historical trajectory data.

To address the aforementioned problems, we propose DestPD which is composed of the offline training phase and the online prediction phase. We make the following contributions in this paper:

1. We propose a novel approach DestPD for destination prediction. Instead of only considering the starting and current location of an ongoing trip, DestPD first predicts the most probable future location and then reports the destination based on that. It directly targets the route connecting two endpoints, which mitigates the data underutilization problem inherent in current methods.

2. To improve computational efficiency, we design two data constructs, ETP and TPD, for training. Since frequent update of the model is necessary to enhance prediction accuracy, we devise OUP and TAA to reduce its computational cost.

3. We develop a new framework for future trajectory prediction. A new method for finding trajectory similarity is proposed. The method is more efficient than earlier ones and targets pairs of trajectories that share overlapping sub-paths. Overlap usually occurs in very similar trajectories, so we are more interested in the nuances between them. To locate the nodes that are important for determining the possible trajectory direction, we introduce an efficient best node selection technique based on dynamic programming. Compared with the naive approach of exponential time and space complexity, it requires only scanning all the nodes in one pass.

4. The experimental results on real-world and synthetic datasets show that DestPD is more accurate than three state-of-the-art methods. Furthermore, DestPD achieves higher coverage and cuts one order of magnitude in both time and space complexity.

The overview of our DestPD approach.

A sketch of our framework for future trajectory prediction. The shaded boxes indicate offline processing.

Average deviation from destination at the 30% - 70% completion point and at the different trip completion points.

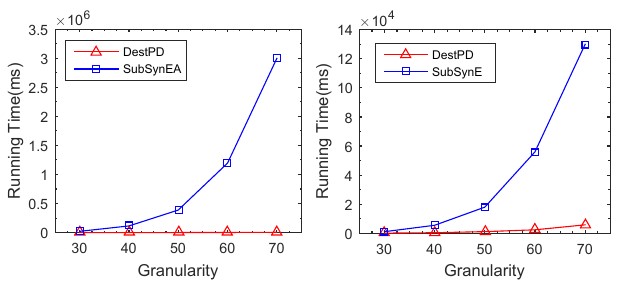

Training time of DestPD, SubSynEA and SubSynE.

A novel ensemble method for classifying imbalanced data

The Department of Computer Science and Technology

The class imbalance problems have been reported to severely hinder classification performance of many standard learning algorithms, and have attracted a great deal of attention from researchers of different fields. Therefore, a number of methods, such as sampling methods, cost-sensitive learning methods, and bagging and boosting based ensemble methods, have been proposed to solve these problems. However, these conventional class imbalance handling methods might suffer from the loss of potentially useful information, unexpected mistakes or increasing the likelihood of overfitting because they may alter the original data distribution.

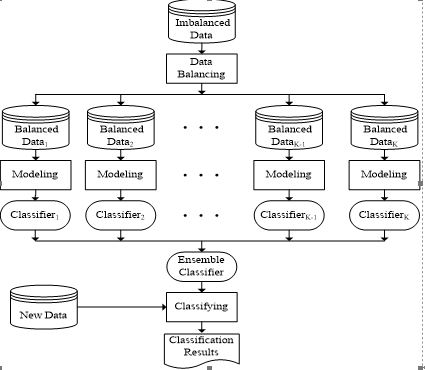

We propose a novel ensemble method, which firstly converts an imbalanced data set into multiple balanced ones and then builds a number of classifiers on these multiple data with a specific classification algorithm. Finally, the classification results of these classifiers for new data are combined by a specific ensemble rule. Figure 1 provides the details of the proposed ensemble method.

Figure 1: Proposed ensemble method for handling imbalanced data
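The three steps of the proposed method (balance, train, combine) can be sketched as follows. The text does not specify how the balanced data sets are formed, which base classifier is used, or which ensemble rule combines the results, so the sketch makes its own assumptions: the majority class is partitioned into chunks of minority-class size, a nearest-centroid classifier stands in for the "specific classification algorithm", and majority voting stands in for the "specific ensemble rule".

```python
def balanced_subsets(majority, minority):
    """Partition the majority class into chunks of (about) minority size and
    pair each chunk with all minority samples. The last chunk may be smaller."""
    size = len(minority)
    return [(majority[i:i + size], minority) for i in range(0, len(majority), size)]


def centroid(points):
    """Component-wise mean of a list of equal-length tuples."""
    dims = len(points[0])
    return [sum(p[d] for p in points) / len(points) for d in range(dims)]


def train_centroid_classifier(negatives, positives):
    """Stand-in base learner: predict 1 if x is closer to the positive centroid."""
    c_neg, c_pos = centroid(negatives), centroid(positives)

    def predict(x):
        d_neg = sum((a - b) ** 2 for a, b in zip(x, c_neg))
        d_pos = sum((a - b) ** 2 for a, b in zip(x, c_pos))
        return 1 if d_pos < d_neg else 0

    return predict


def ensemble_predict(classifiers, x):
    """Assumed ensemble rule: strict majority vote over the base classifiers."""
    votes = sum(clf(x) for clf in classifiers)
    return 1 if 2 * votes > len(classifiers) else 0


# Synthetic data: 9 majority (class 0) samples versus 3 minority (class 1) samples.
majority = [(0.1 * i, 0.0) for i in range(9)]
minority = [(3.0, 0.0), (3.2, 0.0), (2.8, 0.0)]

subsets = balanced_subsets(majority, minority)
classifiers = [train_centroid_classifier(neg, pos) for neg, pos in subsets]
```

Because every majority sample lands in exactly one balanced subset, this particular split discards no data and duplicates no minority samples within a subset, which is one way to avoid altering the original data distribution.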

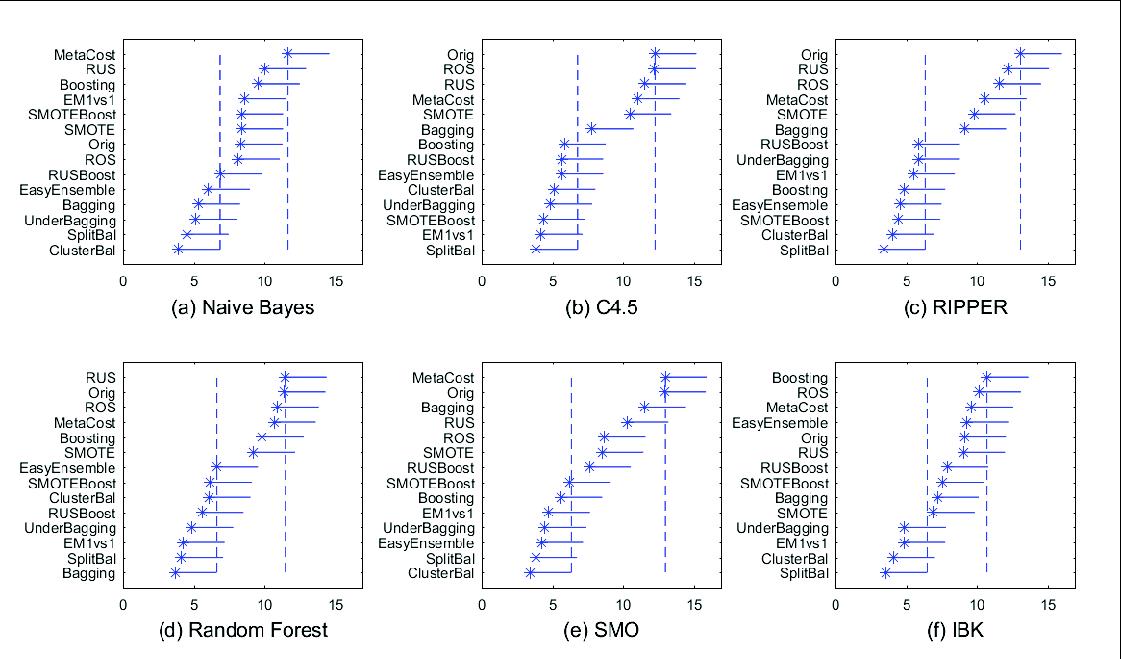

In the empirical study, different class imbalance data handling methods including three conventional sampling methods, one cost-sensitive learning method, six Bagging and Boosting based ensemble methods, and our previous method EM1vs1 were compared with the proposed method. The experimental results on 46 imbalanced data sets show that our proposed method is usually superior to the conventional imbalance data handling methods, as shown in Figure 2.

Figure 2: Results of the comparisons of 14 models using Nemenyi post hoc test

Multifunctional ferroelectric materials and devices

The School of Electronic Science and Engineering

This research mainly focuses on functional materials and devices based on polar dielectrics and on developing their applications in electronics and optoelectronics. It covers the synthesis and preparation of materials, their composition and structure, their properties and applications, and the interrelationships among these. It concentrates on dielectric, ferroelectric, piezoelectric, pyroelectric, electro-optic, ferromagnetic, multiferroic and other advanced functional materials and devices with nonlinear, sensing and actuation performance. Taking a relaxor ferroelectric single crystal as an example, its dielectric, piezoelectric, pyroelectric, electro-optic and other properties are several times or even orders of magnitude higher than those of conventional materials. Based on this type of material, new piezoelectric devices can be developed for ocean observation and polar observation to improve the ultimate sensitivity and detection distance of the detection system. New electro-optical conversion devices can be designed to improve conversion efficiency and detection sensitivity. High-voltage and high-power energy storage capacitors can also be developed for high-power pulse technology, such as controlled nuclear fusion, to improve system efficiency.

Major scientific and technological problems to be solved:

·The key technologies of ferroelectric single crystal devices for polar ocean observation

·Developing design principles and design methods for ferroelectric single crystals for electro-optical information conversion devices

·The key technology of high-voltage and high-power dielectric energy storage devices.

Third and fourth generation wide band gap semiconductor materials and devices

The School of Electronic Science and Engineering

Wide-bandgap semiconductor materials typified by silicon carbide (SiC) and gallium nitride (GaN) are generally called third-generation semiconductor materials. Ultra-wide-bandgap semiconductor materials such as diamond are called fourth-generation semiconductor materials and are also known as the ultimate semiconductors. The third- and fourth-generation semiconductor materials have a large band gap, high breakdown field strength, high thermal conductivity, high electron saturation velocity, strong radiation resistance and, in the case of diamond, good biocompatibility. They are therefore suitable for high-temperature, high-frequency, radiation-resistant and high-power devices and for energy-efficient lighting devices.

Major scientific and technological problems to be solved:

·The epitaxial growth process technology of wide-bandgap semiconductors

·The process technology of heterojunction devices of wide-bandgap semiconductors

·The process technology of new ultra-high-power electronic devices

·Reducing the defect density of inch-level single crystal diamond substrates

·Developing electronic device-level diamond n-type doping, heterojunction epitaxy and heterojunction, biological devices, and all-solid-state functional quantum devices.

Ultrafast photonics and quantum information technology

The School of Electronic Science and Engineering

The second quantum revolution centers on the research and development of technologies and devices that are based entirely on quantum properties. These technologies and devices follow the laws of quantum mechanics and use quantum states as the unit of information: the generation, transmission, storage, processing, and manipulation of information are all based on quantum states. Their information-handling capability far exceeds that of the corresponding classical devices, so they can break through the physical limits of existing information technology and will play a major role in information processing speed, information security, information capacity, and information detection. This new technology is expected to bring profound changes to human society. The research covers new quantum technologies based on quantum mechanics and quantum information theory and the development of quantum devices based on quantum properties. It focuses on key technologies such as quantum detection and recognition, new quantum control, high-brightness entangled light sources, and high-resolution transient imaging.

Major scientific and technical issues to be solved:

·Physical mechanisms of daylight and sky-wave quantum imaging, underwater target quantum imaging, and microwave-photon up-conversion.

Wireless physical layer security: From theory to practice

The Department of Information and Communications Engineering

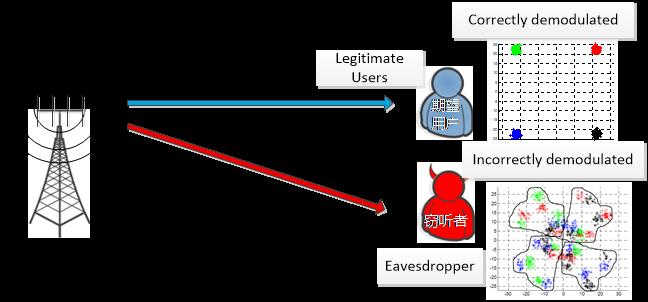

The openness of the wireless physical-layer medium and the broadcast nature of electromagnetic signal propagation expose wireless communication to more severe security challenges than wired communication, making this the main bottleneck of communication security. Wireless physical-layer secure transmission theory, based on the "Information Theoretic Security" criterion, is an effective approach to dealing with these characteristics of the wireless transmission medium. Its basic theory is established on the strict definitions and proofs of information theory. By designing and optimizing signals, the performance limit of secure transmission, i.e. the secrecy capacity, can be theoretically approached without relying on secret communication parameters or shared secret keys. In-depth research on information-theoretic security in recent years shows that fully utilizing the spatial-dimension resources of multi-antenna channels, along with constructing and expanding the channel advantage of target users, has the potential to improve the security of wireless physical-layer transmission substantially. However, the core issue in realizing this potential lies in the effective design and optimization of multi-antenna secure transmission mechanisms and secrecy signals.

Fig. 1 Wireless Physical Layer Security
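The notion of a performance limit for secure transmission can be made concrete with the textbook Gaussian wiretap channel, where the secrecy capacity is the positive part of the difference between the capacities of the legitimate link and the eavesdropper link. The sketch below is only an illustration of that standard formula, not the MIMO formulation studied in this work; the function name and the SNR values are hypothetical.

```python
import math


def secrecy_capacity(snr_main, snr_eve):
    """Secrecy capacity in bit/s/Hz of a Gaussian wiretap channel.

    snr_main: linear SNR of the legitimate receiver.
    snr_eve:  linear SNR of the eavesdropper.
    """
    c_main = math.log2(1.0 + snr_main)
    c_eve = math.log2(1.0 + snr_eve)
    # No secret rate is possible when the eavesdropper has the better channel.
    return max(0.0, c_main - c_eve)


print(secrecy_capacity(15.0, 3.0))  # 2.0 (log2(16) - log2(4))
print(secrecy_capacity(3.0, 15.0))  # 0.0 (eavesdropper has the advantage)
```

The second call shows why the channel advantage of the target user matters: without it, the secrecy capacity collapses to zero, which is what multi-antenna techniques aim to avoid.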

Aiming at solving this core problem, we focus on the enhancement of physical layer security for wireless communications via multiple-antenna technology:

1. We have developed and improved the multi-antenna physical layer security transmission theory, and proposed a joint optimization framework and method for single-user MIMO channel information acquisition and secrecy signal design in both centralized MIMO and distributed multi-node cooperative systems.

2. We established a secrecy performance evaluation system for multi-user communications in stochastic networks, and proposed an adaptive secure transmission method for multi-cell and multi-user MIMO based on stochastic geometry theory.

3. We established the distributed secure transmission theory with joint cooperative beamforming and jamming, and systematically proposed a series of joint design and optimization methods for collaborative beamforming and jamming signals.
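One well-known way to combine beamforming with jamming is to steer the data symbol toward the legitimate receiver while injecting artificial noise in the null space of its channel. The sketch below illustrates that textbook idea for a two-antenna transmitter; it is an assumption made for illustration, not the specific designs proposed in this work, and the channel values and function names are hypothetical.

```python
import math


def beamformer(h):
    """Maximum-ratio transmit weights w = conj(h) / ||h||."""
    norm = math.sqrt(sum(abs(x) ** 2 for x in h))
    return [x.conjugate() / norm for x in h]


def null_space_jammer(h):
    """Unit vector z with h[0]*z[0] + h[1]*z[1] = 0 (two antennas only)."""
    norm = math.sqrt(sum(abs(x) ** 2 for x in h))
    return [h[1] / norm, -h[0] / norm]


def received(channel, tx):
    """Noise-free received sample for a per-antenna transmit vector."""
    return sum(c * x for c, x in zip(channel, tx))


h_bob = [1 + 1j, 0.5 - 2j]           # channel to the legitimate receiver
h_eve = [-0.3 + 0.8j, 1.2 + 0.4j]    # channel to the eavesdropper

w = beamformer(h_bob)
z = null_space_jammer(h_bob)

signal_gain_bob = received(h_bob, w)  # equals ||h_bob|| = 2.5
jam_at_bob = received(h_bob, z)       # numerically zero
jam_at_eve = received(h_eve, z)       # generally non-zero

print(abs(signal_gain_bob), abs(jam_at_bob), abs(jam_at_eve))
```

The jamming component cancels at the legitimate receiver but not at the eavesdropper, which degrades only the eavesdropper's SNR. Note that this construction needs knowledge of the legitimate channel only, not of the eavesdropper's.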

The proposed MIMO secrecy signal design and optimization methods are applied to the 96-antenna physical layer secure transmission system platform in the project "Research on the future wireless access physical layer and system security communication technology", which is the first 863 major project on physical layer security in China. The effectiveness of the theoretical methods is verified by prototypes and field tests, and it is verified that the physical layer security of wireless transmission is significantly improved.

Wireless physical layer security has wide applications in fields such as 5G, cyber-physical systems, and the Internet of Things.

Microwave Microfluidic Biosensors Involved with Metamaterials

The Department of Information and Communications Engineering

Microwave techniques provide powerful tools for label-free and non-invasive analysis of the dielectric permittivity of biological as well as non-biological samples such as tissues, cell suspensions, isopropanol (IPA) and water mixtures, amino acid solutions, and human blood. Integrating microwave techniques with microfluidic techniques, which process and manipulate nano-liter to atto-liter volumes of fluids, creates a new subdomain named microwave-microfluidics. This integration opens the possibility of highly efficient and automated sensing, characterization and heating of nano-liter and sub-nano-liter bio-liquids with microwave technology. In our recent work, microwave biosensors incorporating metamaterials were designed; the metamaterials help to significantly improve the sensitivity and reduce the size of the biosensors.

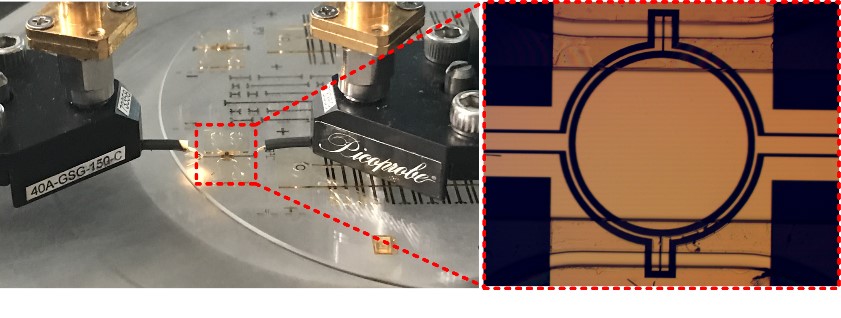

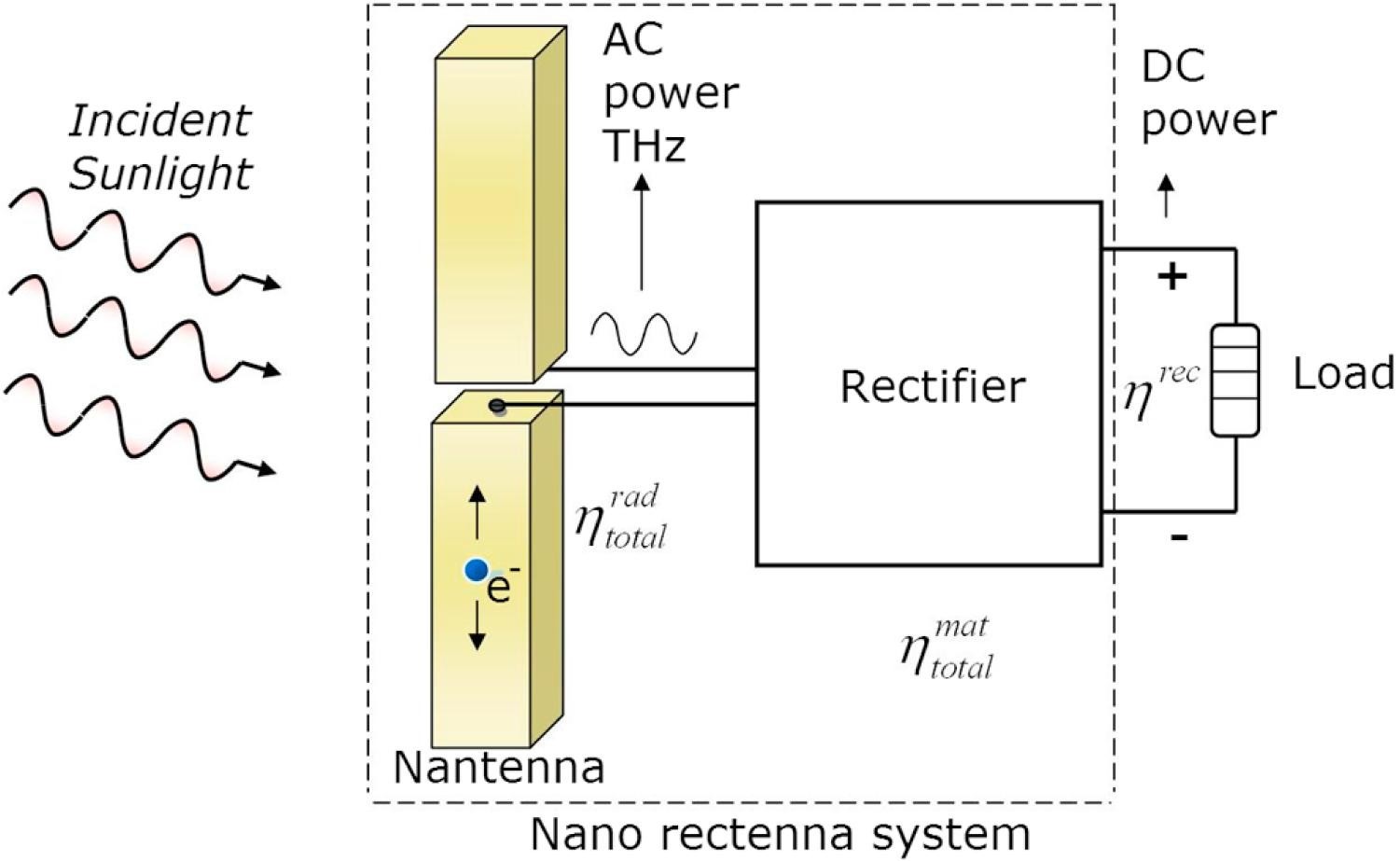

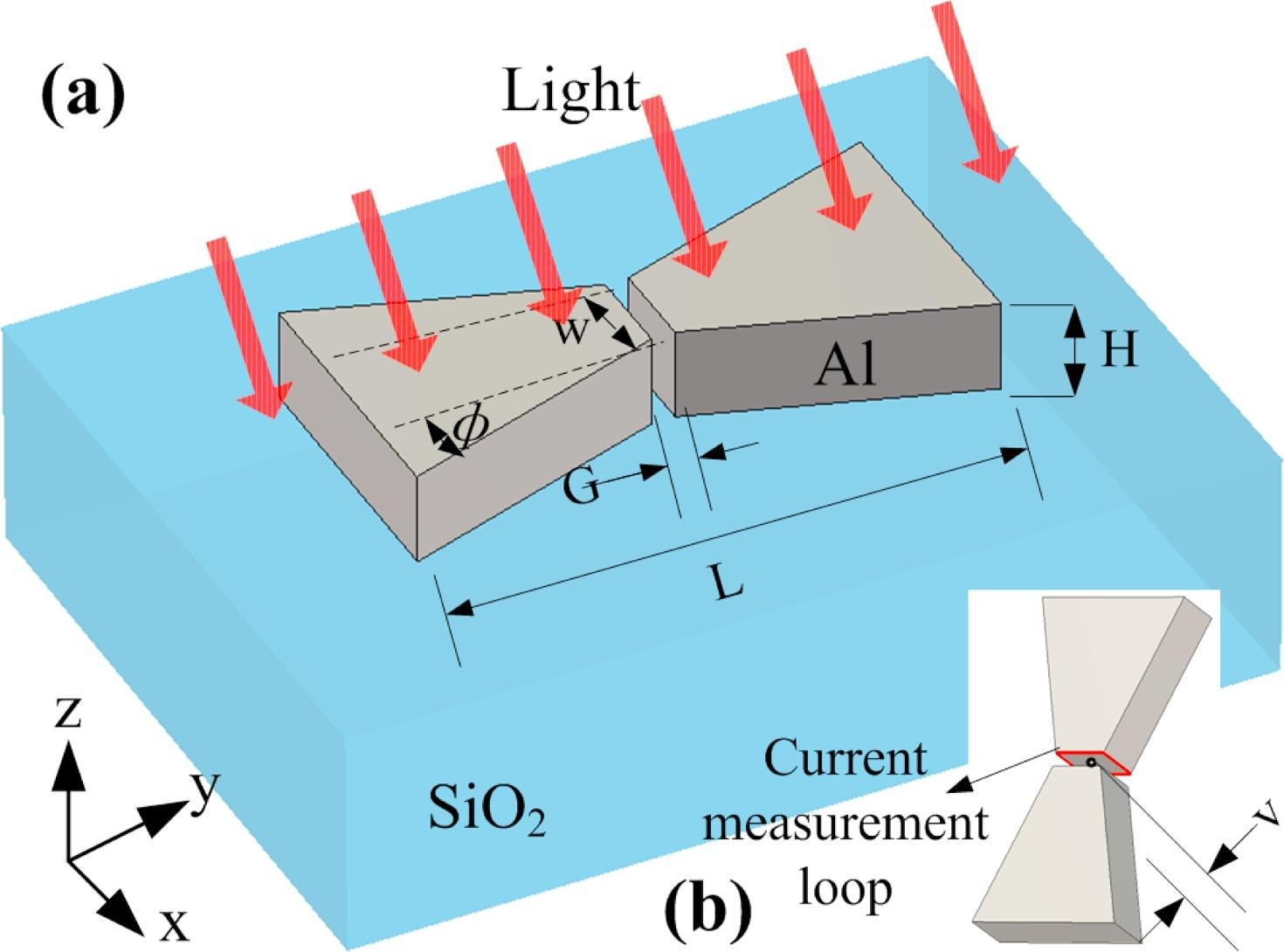

Nano-antennas for Solar Energy Harvesting Applications

The Department of Information and Communications Engineering

Whether optical waves can be efficiently converted into electricity using nanometer-scale antennas and rectifiers is a fundamental question and a highly important research topic. Owing to the rapid progress in nanoscale fabrication in recent decades, some researchers claim that so-called nano-rectennas could harvest more energy from a wider spectrum of sunlight, thereby offering a more efficient and cheaper alternative to traditional silicon solar cells. Once realized, this concept could revolutionize the energy market and partially solve the energy problem of humankind in a completely clean and renewable way. Several topologies of nano-antennas have been studied and their potential efficiencies compared. Our work investigated to what level the receiving efficiency (including a matched rectifier) can be increased by optimizing the shape and material of a bowtie dipole nanoantenna.

Wearable antenna design based on Metamaterials

The Department of Information and Communications Engineering

Medical and health monitoring has attracted wide attention in modern society. A wireless body area network (WBAN) is a good solution since it can collect physical data of the human body from distributed sensors and send them to an on-body or off-body processor. As critical components for the wireless communication, wearable antennas require a different design flow from that of normal antennas. Their performance directly influences the speed and robustness of the data transmission and the energy consumption of the system. Meanwhile, the energy absorbed by human tissues, evaluated through the Specific Absorption Rate, should be limited in order to minimize the health risks of long-term irradiation. Our recent work systematically analyzed design methods and fabrication technologies for wearable antennas. We are one of the first research groups to strategically combine metamaterials with the design of wearable antennas. Some initial attempts have been made in the past five years. Several textile antennas based on simple metamaterial topologies have been designed and realized. The results have been published in international journals and at conferences, offering a first glimpse of the possibilities of combining the two fields.

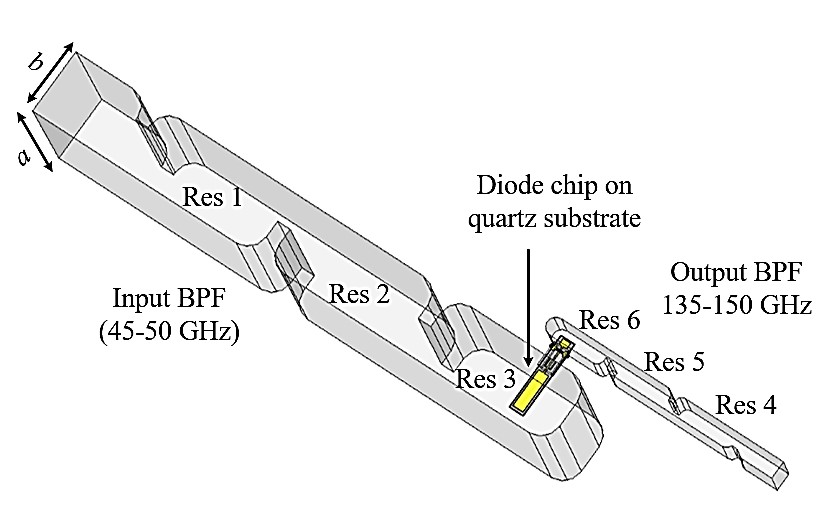

Low-THz non-linear Circuits based on Filtering Matching Networks

The Department of Information and Communications Engineering

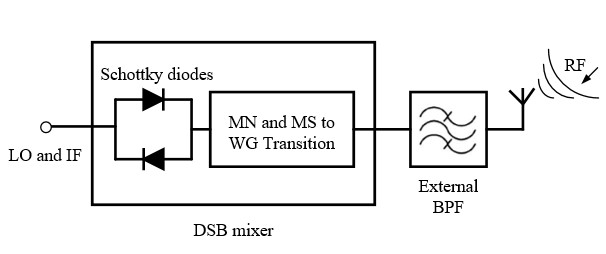

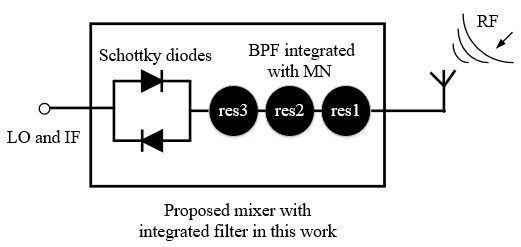

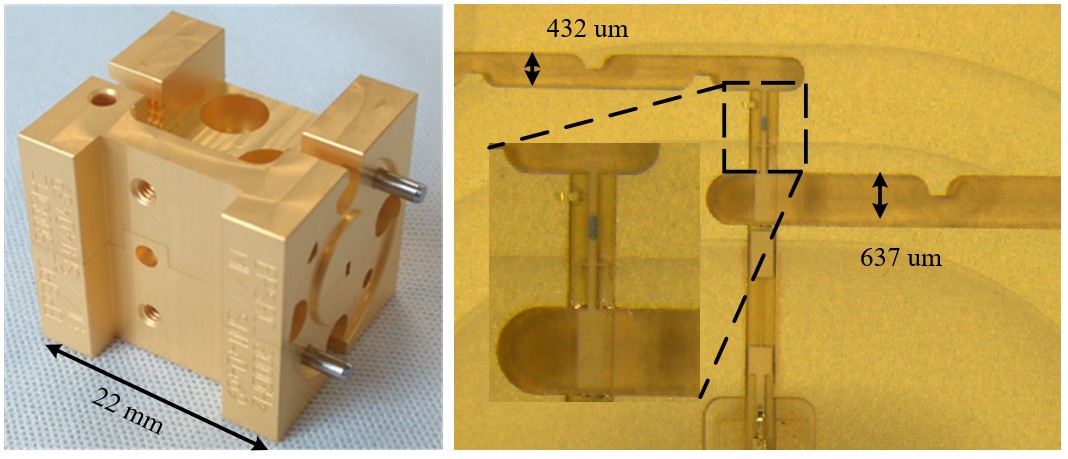

Filters and impedance matching networks are essential and frequently used microwave circuit elements. The conventional approach is to design filters and matching networks separately and then combine them in series to fulfil the design requirements. In this work, filters (described by coupling matrices) that also provide impedance matching are used in the design of a WR-5 band (135-150 GHz) frequency multiplier [1] and a WR-3 band (290-310 GHz) mixer [2] based on Schottky diodes, as shown in Figs. 1 and 2.

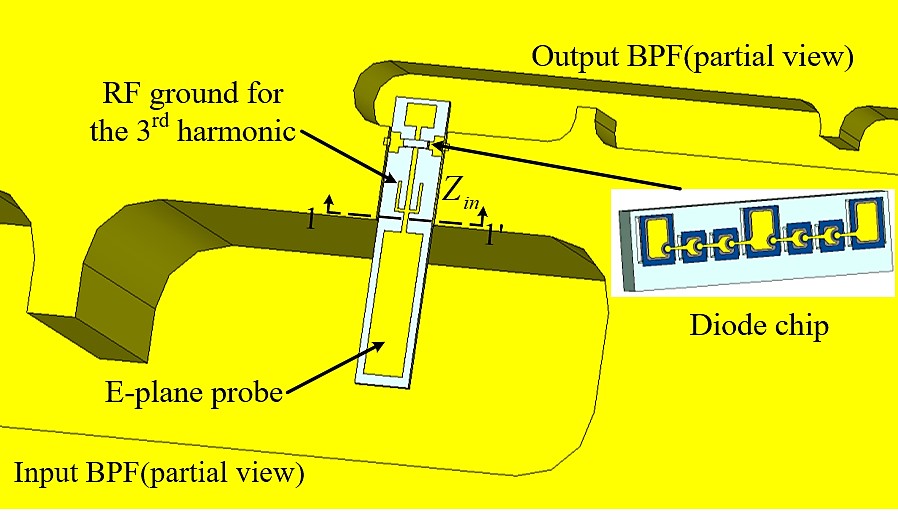

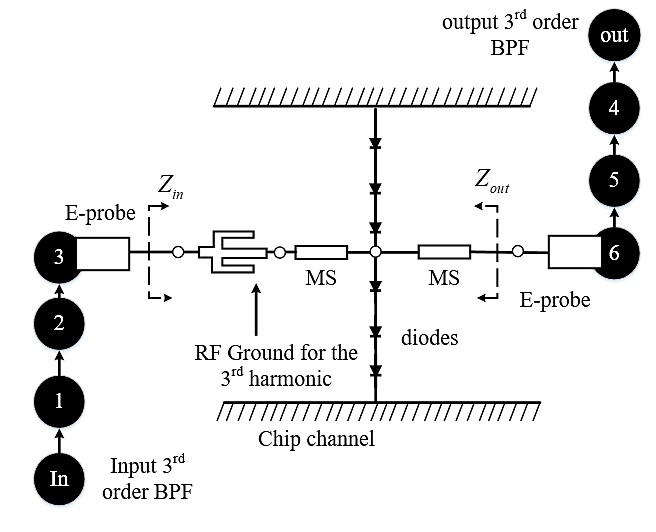

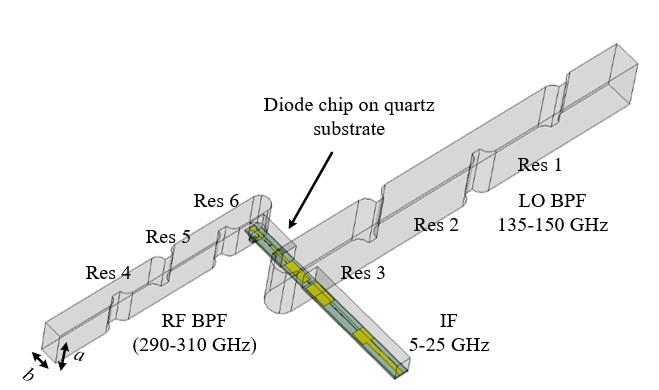

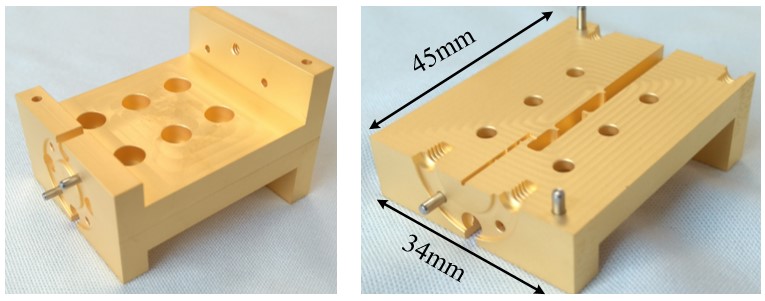

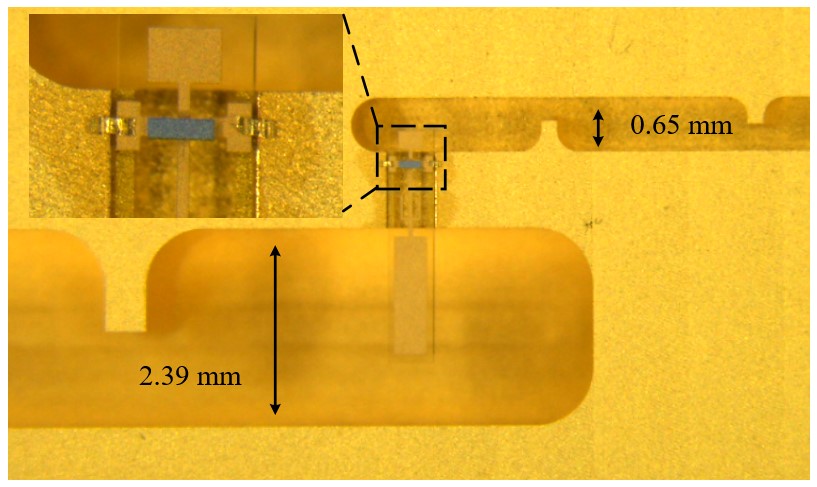

Fig. 1. The proposed frequency tripler using six resonators to perform impedance matching. (a) Three-dimensional model of the tripler. a=4.78 mm, b=2.39 mm. (b) Enlarged view of the microstrip area. (c) Diagrammatic view of the resonators (black filled circles) and the couplings (arrows). The input and output of the diodes are coupled via a microstrip (MS) line and E-plane probe to the 3rd and the 6th resonators.

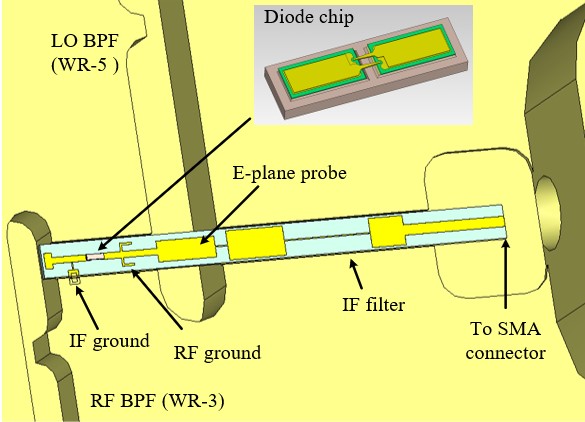

Fig. 2. The proposed image rejection mixer using integrated waveguide filters. (a) Three-dimensional model of the mixer in CST. a=0.864 mm, b=0.432 mm. (b) Enlarged view of the mixer. (c) Comparisons between a conventional SSB mixer and the proposed mixer with integrated filter.

The use of filters to perform impedance matching of these nonlinear devices gives several advantages. Conventionally, matching networks are integrated with the Schottky diodes in microstrip or coplanar line, and these can be lossy, especially at high frequencies. For instance, the unloaded quality factor (Qu) of a microstrip resonator, realized at 142.5 GHz by a half-wavelength 50 Ω copper transmission line on a 50 µm thick quartz substrate, is calculated using CST as Qu = 224. CST predicts that the corresponding WR-5 copper waveguide cavity resonator, operating in the TE101 mode, has Qu = 1940. Hence, at high frequencies, it is desirable to move the matching and/or filtering networks from the microstrip/coplanar circuit to lower-loss waveguide equivalents. Also, compared with the conventional approaches, this integrated design leads to reduced circuit complexity and a smaller circuit size.

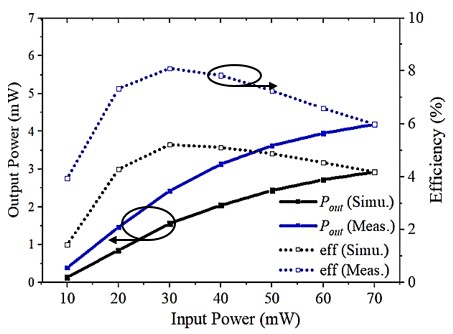

Fig. 3 shows the fabricated tripler and the simulated and measured tripler responses. Overall, good agreement between simulation and measurement is achieved, especially for the S11 response, where three reflection poles are clearly visible. The achieved tripler efficiency is about 5% over the 135-150 GHz band.


Figure 3. WR-5 band frequency tripler. (a) Machined tripler blocks. (b) Measurement results

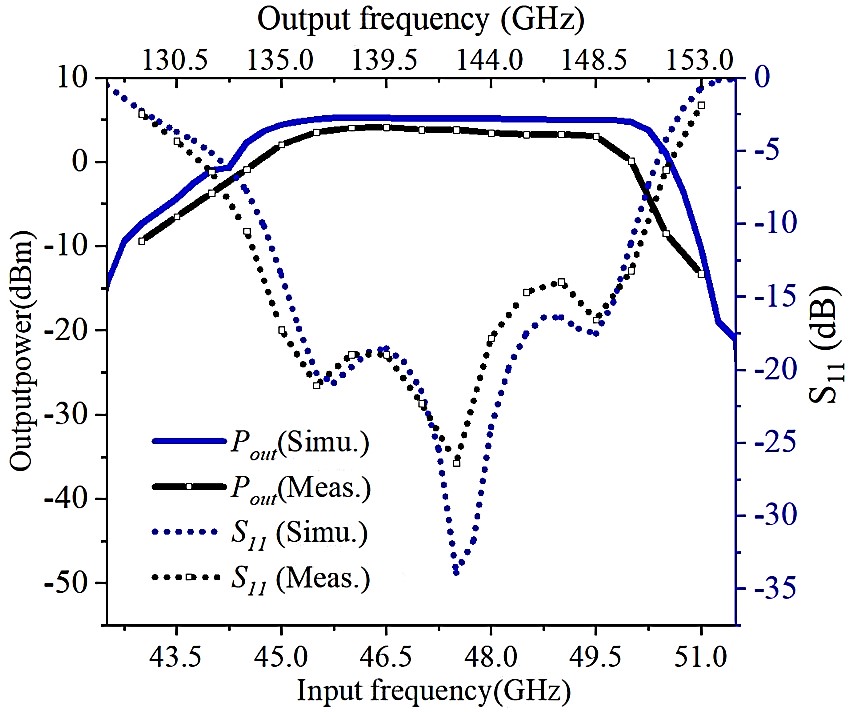

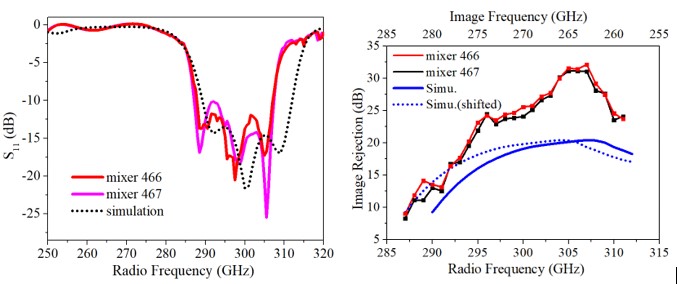

Fig. 4 shows the fabricated mixer and the simulated and measured mixer responses. Because coupled resonators are used as the matching network at the RF port, the mixer inherently integrates a filtering function. The measured RF S11 is around -12 dB, while the image rejection is around 20 dB.

Figure 4. WR-3 band SSB mixer. (a) Machined mixer blocks. (b) Measurement results

Over-the-air (OTA) testing of 4G/5G multiple-input multiple-output (MIMO) devices in reverberation chambers and multi-probe anechoic chamber

The Department of Information and Communications Engineering

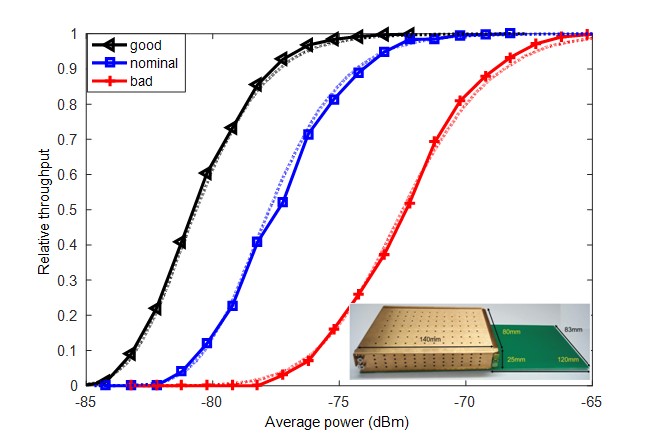

Measurement of multiple-input multiple-output (MIMO) throughput in a reverberation chamber

Normalized throughputs of a mobile phone with CTIA good, nominal, and bad reference MIMO antennas. A photo of the CTIA reference antenna is shown in the bottom corner. Solid curves correspond to measurements; dotted curves correspond to simulations.

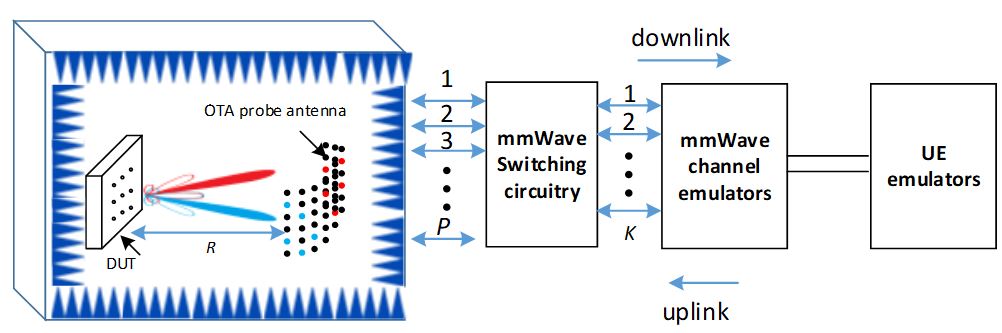

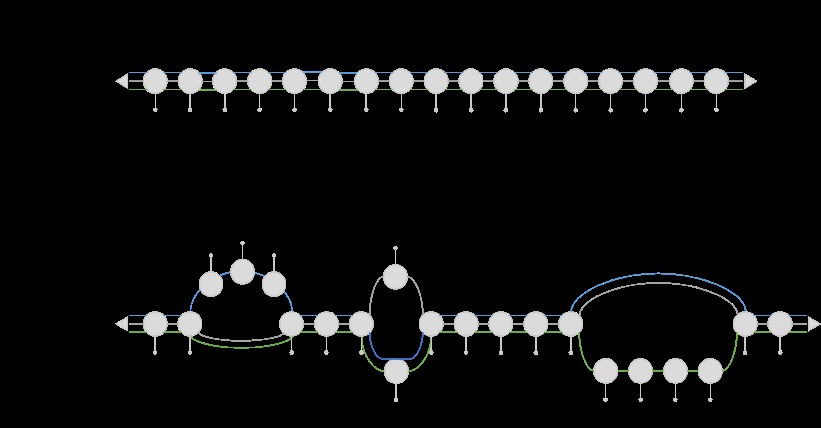

An illustration of the 3D sectored multi-probe anechoic chamber (MPAC) setup for over-the-air (OTA) testing of 5G millimeter-wave devices. OTA antennas and selected active OTA antennas are denoted by black and colored dots, respectively.

Power angular spectra (PAS) of (a) the target channel, (b) the emulated channel using the sectored MPAC.

Algorithm and software development for analyzing genomic data

The School of Cyber Security

Graph Genome is a new genome data structure for representing a reference genome. The human reference genome serves as the foundation for sequencing data analysis, but it currently reflects only a single consensus haplotype, which impairs analysis accuracy, especially for samples from non-Caucasian populations. The graph genome has been proposed to tackle this issue by incorporating common variations into the human reference genome, so that an implementation can reflect the common genome variations of other populations. However, existing implementations of the graph genome are at an early stage of development and cannot sustain large-scale genomic data. We therefore propose to reduce time and space complexity by using allele frequency and gene phasing. Filtering variants by allele frequency reduces the number of nodes, while gene phasing merges several variants into a haplotype and further reduces the number of paths in a graph genome.
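The effect of the two reductions can be shown with a toy sketch. The record layout, the frequency threshold and the path-counting model (each biallelic site or each merged haplotype contributes an independent two-way branch) are simplifying assumptions made for illustration; they are not the data model of the actual implementation.

```python
from collections import defaultdict

# Hypothetical variant records: (position, alt_allele, allele_frequency, phase_set)
variants = [
    (100, "A", 0.30, "ps1"),
    (150, "T", 0.001, "ps1"),
    (220, "G", 0.12, "ps1"),
    (400, "C", 0.08, "ps2"),
    (450, "T", 0.25, "ps2"),
]


def filter_by_allele_frequency(records, min_af):
    """Drop rare variants so they do not become nodes in the graph."""
    return [r for r in records if r[2] >= min_af]


def merge_by_phase_set(records):
    """Group variants that travel together on one haplotype."""
    haplotypes = defaultdict(list)
    for pos, alt, _af, phase_set in records:
        haplotypes[phase_set].append((pos, alt))
    return dict(haplotypes)


def count_paths(n_branch_points):
    """Each independent branch point (reference vs. alternative) doubles the paths."""
    return 2 ** n_branch_points


common = filter_by_allele_frequency(variants, min_af=0.05)
haplotypes = merge_by_phase_set(common)

print(count_paths(len(variants)))    # 32 paths with all five variants
print(count_paths(len(common)))      # 16 paths after frequency filtering
print(count_paths(len(haplotypes)))  # 4 paths after merging phased variants
```

In this toy model the filter removes one node, while phasing collapses the four remaining variants into two haplotype branches, so the number of paths grows with the number of haplotype blocks rather than with the number of variants.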

AX-CNV is an advanced project that builds NGS-based assays as the first-tier cytogenetic diagnostic test in Clinical Laboratory Improvement Amendments (CLIA) certified clinical genomics laboratories. Chromosomal abnormalities have been associated with a large number of constitutional disorders, and chromosomal microarray (CMA) serves as the standard assay for cytogenetic diagnosis, but its limitations lead to a low negative predictive value. NGS could replace CMA; the barrier is the lack of a stable and robust bioinformatics pipeline. Preliminary results show the same sensitivity as CMA and higher precision.

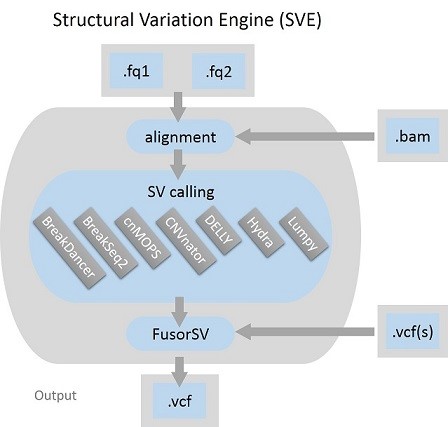

SVE/FusorSV is a Python-based execution engine for structural variation (SV) detection. It accepts data inputs at any level (raw FASTQs, aligned BAMs, or variant call format (VCF) files) and generates a unified VCF as its output. By default, SVE consists of alignment, realignment and an ensemble of state-of-the-art SV-calling algorithms: BreakDancer, BreakSeq, cnMOPS, CNVnator, DELLY, Hydra and LUMPY. FusorSV, a data mining approach that assesses performance and merges callsets from an ensemble of SV-calling algorithms, is also embedded. GitHub: https://github.com/TheJacksonLaboratory/SVE

MOSAIK is a short-read mapper that aligns next-generation sequencing short reads against a reference genome. It has been used in projects ranging from population re-sequencing to medical sequencing studies and has made significant contributions. GitHub: https://github.com/wanpinglee/MOSAIK

A 13.56 MHz, 94.1% Peak Efficiency CMOS Active Rectifier with Adaptive Delay Time Control for Wireless Power Transmission Systems

The School of Microelectronics

In this paper, an adaptive delay time control (ADTC) based CMOS active rectifier is proposed. Compared with previous active rectifiers, power-hungry comparators are eliminated in this structure. Instead, the optimal on/off time of the power switches is generated by two current-controlled delay lines (CCDLs), which enables a drastic power reduction of the active rectifier. In addition, the multiple-pulsing problem is eliminated by the introduced control mechanism. The on/off control, with picosecond-level precision, removes the reverse current of the rectifier and guarantees a high voltage conversion rate (VCR) and power conversion efficiency (PCE). The active rectifier is fabricated in a standard 0.18 μm CMOS process. The experimental results show that the quiescent power consumption of the rectifier is less than 230 μW. The current conduction angle reaches the optimal state under different input and load conditions because of the adaptive adjustment. The peak PCE is 94.1% at an output power of 10.63 mW. The PCE is enhanced by 6.7% compared with the previous design. The maximum output power of 34.1 mW is achieved with an input AC amplitude of 2.5 V. The proposed low-power, high-efficiency active rectifier offers a favorable solution for wireless power transmission systems.

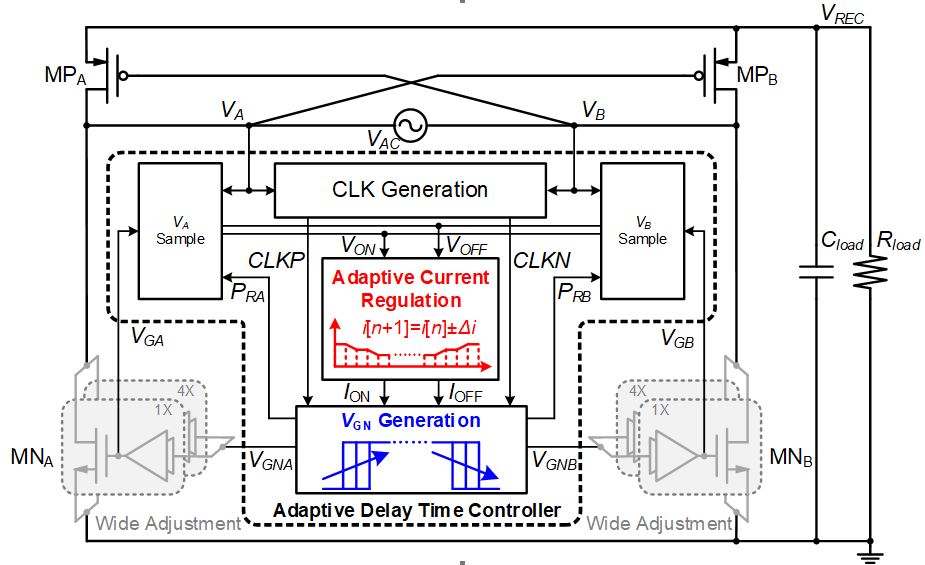

Fig. 1 Architecture of the proposed active rectifier with Adaptive Delay Time Control

The architecture of the active rectifier is shown in Fig. 1. It consists of the main power stage and the proposed adaptive delay time controller. The CLK generation module generates the two-phase clock signals CLKP and CLKN by comparing the magnitudes of the voltages at the input nodes VA and VB. The VGN generation module delays the rising edge of CLKP twice to obtain the control signal VGNA of the power NMOS MNA, and delays the rising edge of CLKN twice to obtain VGNB of the power NMOS MNB. The sampling circuit then samples the input nodes VA and VB at the time when the power MOS is turned on (off) and obtains the sampled voltage at the turn-on (turn-off) time, VON (VOFF). The adaptive current regulator (ACR) uses the voltages VON and VOFF to adjust the control currents ION and IOFF by successive approximation, so that VON and VOFF are zero when the rectifier is stable and the rectifier achieves zero current switching.
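The successive-approximation idea behind the adaptive current regulator can be sketched as a bit-by-bit search that drives a sampled voltage to zero. This is a behavioral illustration only: the 8-bit digital code, the linear relation between code and sampled voltage, the sign convention and all names are assumptions, and the on-chip regulator is a circuit rather than software.

```python
def successive_approximation(sample_voltage, n_bits=8):
    """Return the largest code whose sampled voltage is not yet positive.

    sample_voltage(code) models the voltage sampled at the switching instant
    for a given control code; it is assumed to increase monotonically with
    the code and to cross zero at the ideal switching time.
    """
    code = 0
    for bit in reversed(range(n_bits)):  # from MSB down to LSB
        trial = code | (1 << bit)
        if sample_voltage(trial) <= 0.0:
            code = trial                 # keep the bit: delay still not too long
    return code


def make_toy_rectifier(ideal_code, volts_per_code=0.004):
    """Hypothetical plant: sampled voltage is proportional to the code error."""
    def sample_voltage(code):
        return (code - ideal_code) * volts_per_code
    return sample_voltage


v_on = make_toy_rectifier(ideal_code=173)
code = successive_approximation(v_on)
print(code, v_on(code))  # 173 0.0
```

The search needs only one sign decision per bit, so an n-bit setting converges in n switching cycles, and it can simply be rerun when the input amplitude or load changes.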

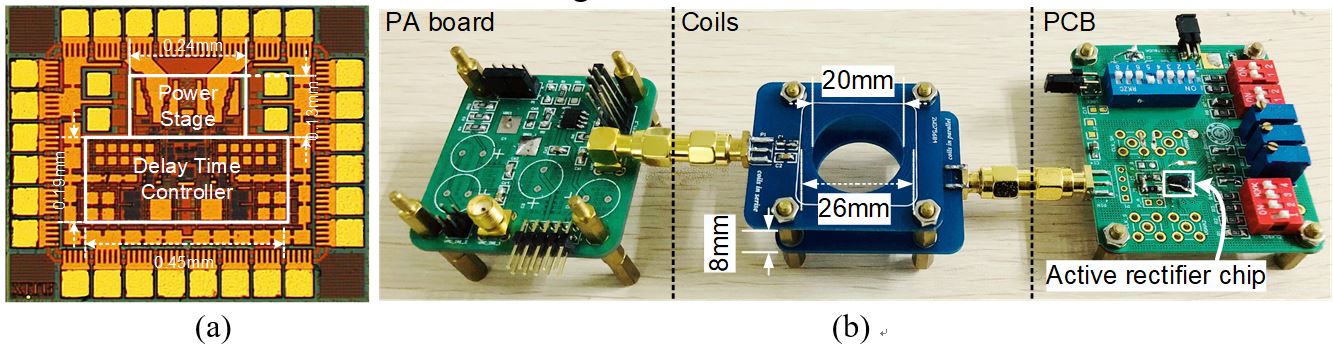

Fig. 2 Chip photograph of the active rectifier and the test platform of the Wireless Power Transfer system.

Fig. 2 (a) shows the chip photograph. The chip occupies 0.78 mm × 0.64 mm including PADs, and the core area is 0.117 mm². Fig. 2 (b) shows the test platform, which consists of a PA board, two coupling coils and a test printed circuit board (PCB) with the designed chip.

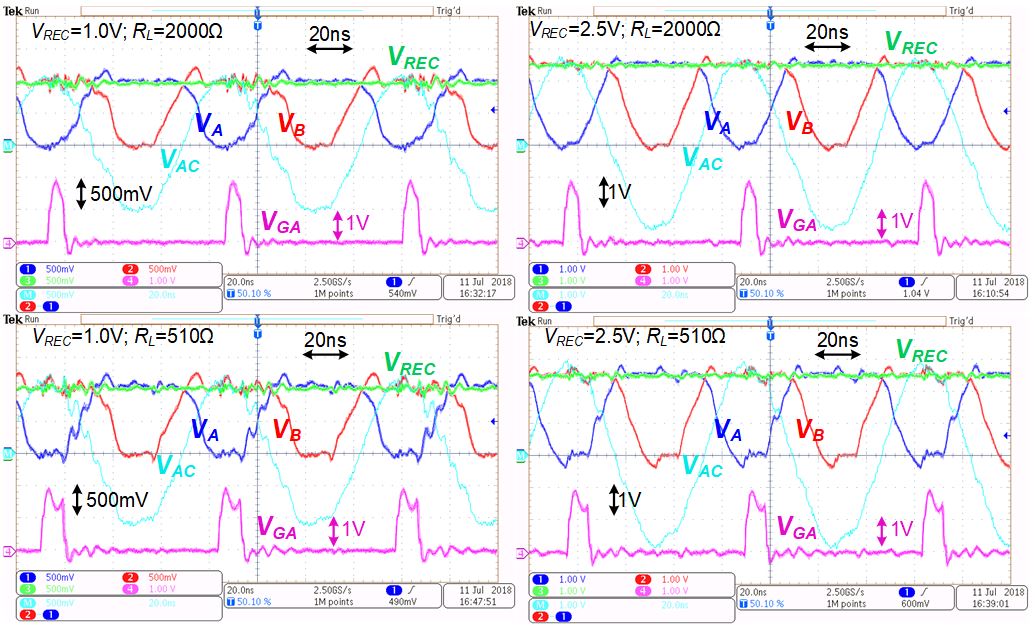

Fig. 3 Measured transient waveforms of VA, VB, VAC (calculated), VREC and VGA under different input voltage and load conditions.

Fig. 3 shows the measured transient waveforms of the input nodes (VA, VB, VAC), the output node (VREC) and the gate signal (VGA) of MNA when VREC varies from 1.0 V to 2.5 V and the load resistance RL varies from 2000 Ω to 500 Ω. VGA remains optimal under the different input and load conditions and no multiple pulsing occurs, which shows the effectiveness of the proposed ADTC and of the successive approximation algorithm.

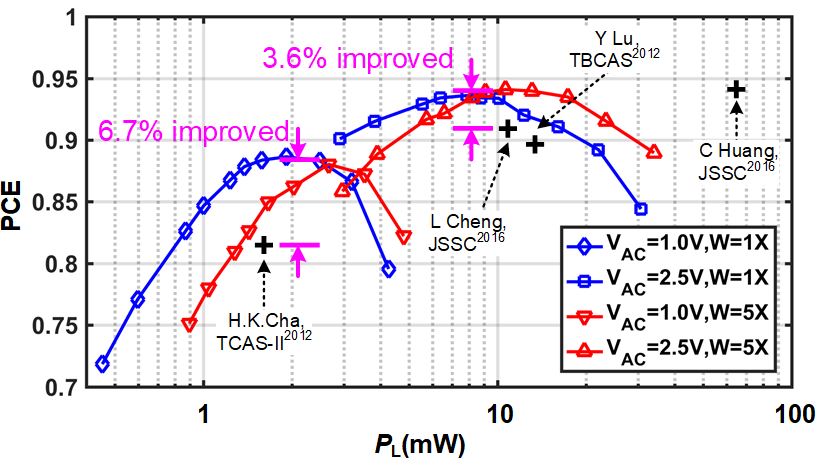

Fig. 4 Measured PCE versus PL under different VAC and gate widths W, and comparison with previous state-of-the-art designs.

The measured PCE of the rectifier versus load power PL under different VAC and gate widths W is shown in Fig. 4. The envelope of the PCE is higher than 90% when PL changes from 2 mW to 30 mW. The peak PCE of 94.1% is obtained at VAC=2.5 V and PL=10.63 mW with W=5X. Compared with previous work, the peak PCE in this work increases by at least 3.6% near 10 mW. When the load power decreases to the 2 mW level, the PCE of the proposed rectifier increases by 6.7%.
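To put the peak figure in perspective, the short calculation below derives the input power and the power lost in the rectifier at the peak-efficiency point, assuming the usual definition of PCE as output power divided by input power. The helper name is hypothetical; the two input numbers are the ones reported above.

```python
def input_power_mw(output_power_mw, pce):
    """Input power implied by PCE = P_out / P_in."""
    return output_power_mw / pce


p_out = 10.63  # mW, load power at the peak-efficiency point
pce = 0.941    # peak power conversion efficiency

p_in = input_power_mw(p_out, pce)
loss = p_in - p_out
print(f"{p_in:.2f} mW in, {loss:.2f} mW lost")  # 11.30 mW in, 0.67 mW lost
```

Under this definition, the total loss at the peak point is roughly 0.67 mW.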

A Battery-Less, 255 nA Quiescent Current Temperature Sensor with Voltage Regulator Fully Powered by Harvesting Ambient Vibrational Energy

The School of Microelectronics

Abstract & Overview

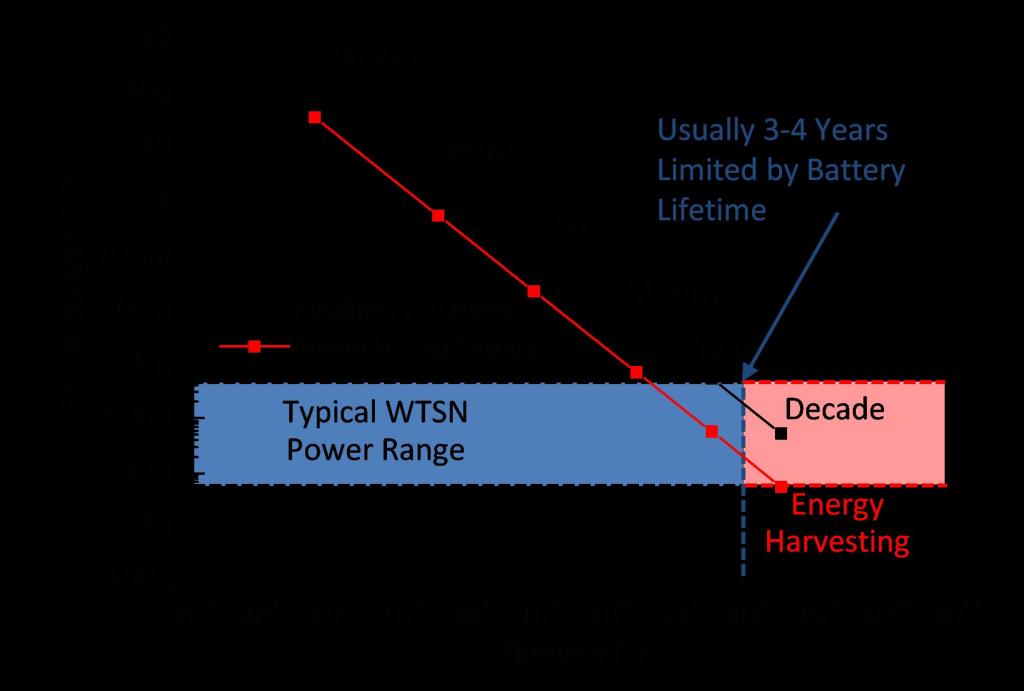

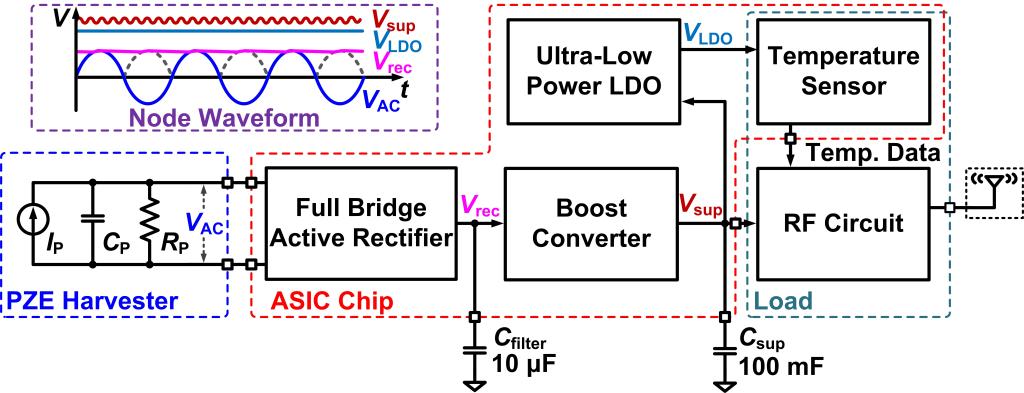

We report on the circuit design and experimental demonstration of a battery-less, self-powering temperature sensor node, powered entirely by a piezoelectric (PZE) ambient vibrational energy harvester and enabled by a novel ultra-low-power application-specific integrated circuit (ASIC). The ASIC collects the energy generated by the PZE transducer and stores it in a supercapacitor, and powers both the on-ASIC temperature sensor and the on-board RF module for wireless temperature sensing applications. The ASIC is fabricated using a standard 0.5 μm CMOS process. The quiescent current of the entire ASIC is only 55 nA for energy harvesting, and 255 nA when temperature sensing is also included. Such self-powering temperature sensors can help enable the low-cost transformation of today’s ordinary buildings into energy-efficient smart buildings.

Motivation & Background

Wireless sensor networks are key elements for energy-efficient smart buildings and infrastructures. Battery-powered sensors, however, have high maintenance costs due to the mandatory replacements of batteries. Vibrational energy harvesting provides a pathway towards battery-less, self-powering wireless sensors.

Circuit Implementation

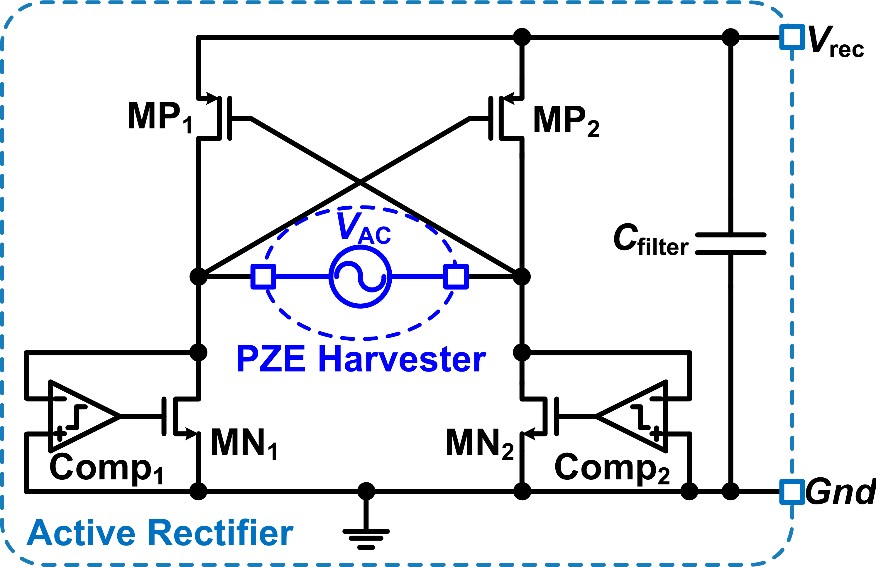

Rectifier: (i) includes four power MOSFETs and two comparators; (ii) full bridge; (iii) active driving for NMOS; (iv) cross-coupled driving for PMOS; (v) high voltage conversion efficiency and fully integrated.

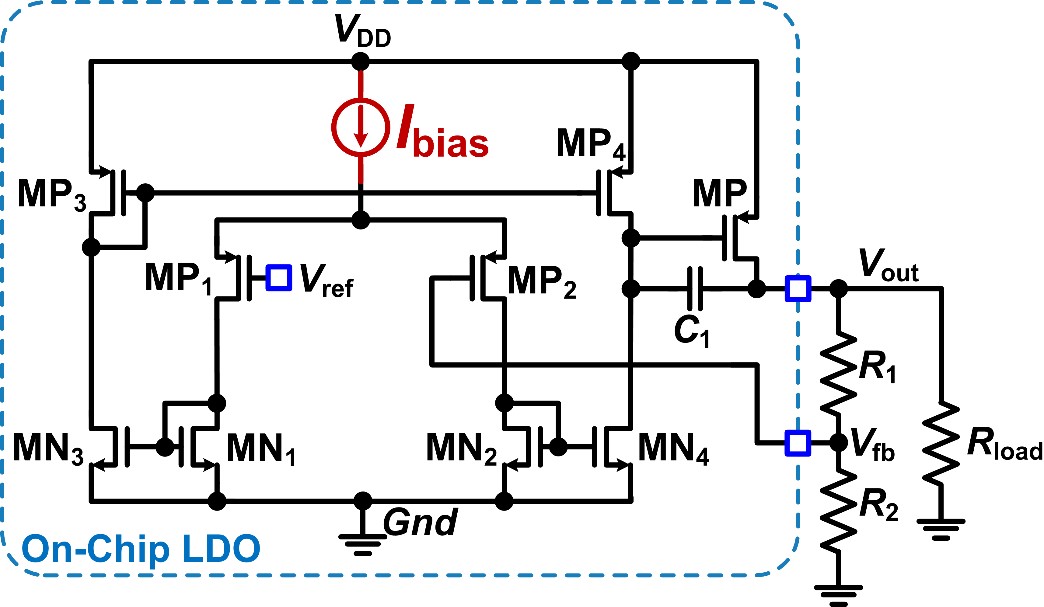

LDO: (i) 750 pA quiescent bias current; (ii) >85° PM @10 nA ~ 100 μA load current; (iii) 50 mV dropout voltage; (iv) low noise supply for sensors.

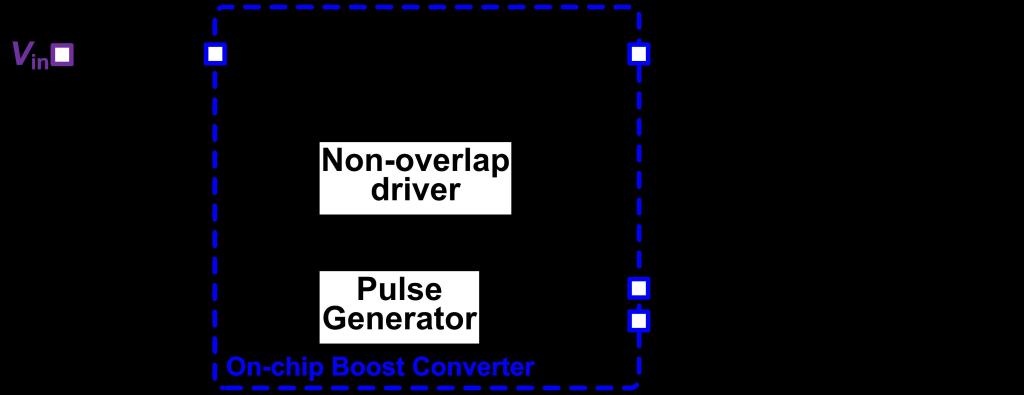

Boost Converter: (i) hysteresis controlled; (ii) ULQ consumption; (iii) step-up converter; (iv) impedance matching for MPPT.

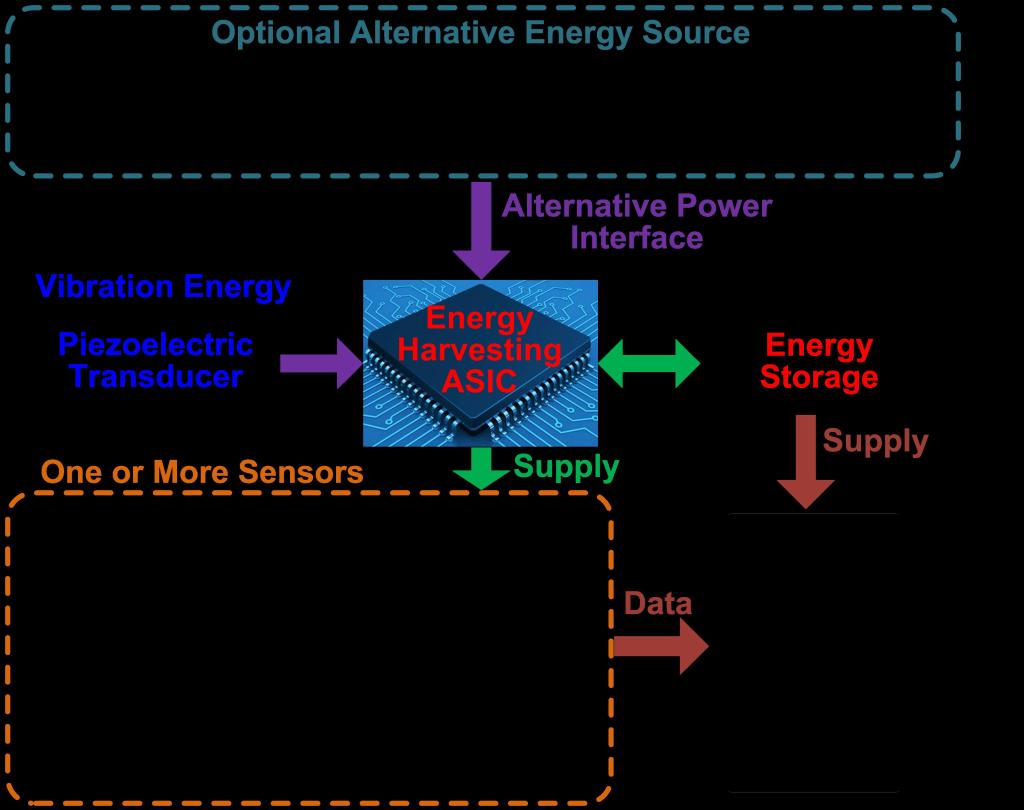

System Diagram

System Architecture:

Energy source

ASIC

- Energy harvester

- Voltage regulator

- Temperature sensor

Energy storage unit

RF transceiver

The energy harvester converts vibration energy from a PZE transducer into electrical energy and stores it in the storage unit (supercapacitor/rechargeable battery). The ASIC powers the sensors with a tunable voltage. The RF module monitors the voltage level and determines the rate for data transmission.

ASIC Design & Operation

ASIC Modules: (i) a full bridge active rectifier, which rectifies the AC input from the PZE vibration energy harvester; (ii) an ultra-low-power boost converter, which uses the rectified energy input to charge the supercapacitor (the energy storage unit), and maintains a high charging efficiency; (iii) an ultra-low quiescent current low dropout (LDO) regulator that provides a clean supply voltage (using the energy stored in the supercapacitor) with low ripples and low noise, for powering the front-end on-ASIC temperature sensor; (iv) a monolithic nano-Watt (nW) temperature sensor integrated on-ASIC that outputs the temperature signal in digital form for transmission.

Timing Diagram:

When Vrec>Vth, the on-chip bias circuit begins to provide bias current and reference voltage for the comparators and amplifiers in all other ASIC modules, and the boost converter and LDO start operating;

When Vsup>VtH, wireless transmission is enabled, until Vsup

ASIC Design Summary:

Include five modules;

A small capacitor Cfilter is used to smooth the output voltage of rectifier;

A large supercapacitor Csup is adopted as the energy storage unit to supply the load;

No additional source, bias or reference is needed;

RF transceiver;

Tests & Results

Testing Platform:

Energy harvester;

Vibration stage;

PCB with ASIC (fab. by 0.5 μm CMOS);

Oscilloscope and probes.

Temperature Sensor Testing:

Fully powered by PZE vibrational energy

2 hour period test (15 min/temp. data)

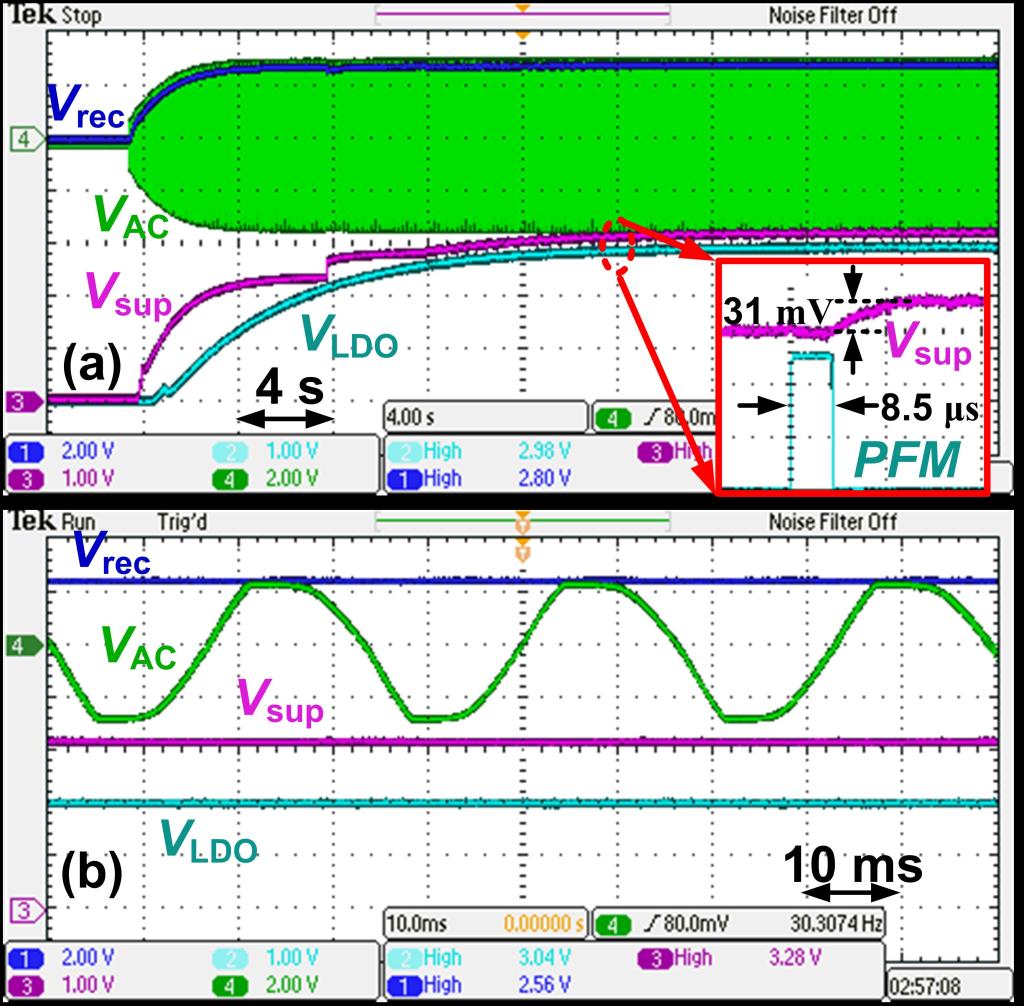

Rectifier Testing:

Startup period, Vth ≈ 1.5 V for bias circuit

w/ and w/o active driving, >96% VCR

Boost Converter and LDO Testing:

Startup period, >20 s

PFM, 8.5 μs on-time

Steady state

- Vsup=3.3 V with 31 mV ripple

- VLDO=3.0 V

Conclusions & Perspectives

Demonstrate a monolithically integrated CMOS temperature sensor on ASIC

ASIC fully powered by harvesting ambient vibration energy

ASIC fabricated by standard 0.5 μm CMOS process

55 nA quiescent current consumption of the power management

200 nA quiescent current consumption of the temperature sensor

Up to 96% voltage conversion rate of the rectifier

High power conversion efficiency

Low-cost

Acknowledgements: We acknowledge support from the National Natural Science Foundation of China (61504105) and the China Scholarship Council (CSC No. 201506285143).